OS.bee Documentation for Designer

Contents

- 1 OS.bee Documentation for Designer

- 1.1 Get Started

- 1.1.1 Pitfalls with new Eclipse installations

- 1.1.2 Eclipse Installation / Installation SWF / New Project from GIT

- 1.1.3 csv2app - question regarding ENUM types

- 1.1.4 Launch Application from Eclipse (very slow)

- 1.1.5 Structure of the documentation page

- 1.1.6 Setup Foodmart MySQL database and data--PART1

- 1.1.7 Setup Foodmart MySQL database and data--PART2

- 1.1.8 Setup Foodmart MySQL database and data--PART3

- 1.1.9 Working with the H2 DB

- 1.1.10 Performance tuning for MySQL databases

- 1.2 Modeling

- 1.2.1 Display of article description

- 1.2.2 Connecting different database products

- 1.2.3 Default Localization

- 1.2.4 CSVtoApp ... Column limitation?

- 1.2.5 Entering a number without keypad

- 1.2.6 Missing bundles after update and how to solve it

- 1.2.7 Creating CSV files as input for AppUpIn5Minutes with OS.bee

- 1.2.8 import declaration used in most DSL

- 1.2.9 Entity DSL (DomainKey DomainDescription)

- 1.2.10 assignment user -> position

- 1.2.11 I18N.properties (Reorganization of obsoleted values)

- 1.2.12 Display values from ENUMS in Table

- 1.2.13 References to images

- 1.2.14 Perspective with master/slave relationship

- 1.2.15 Perspective use border to show boundary for each sash

- 1.2.16 Datainterchange Export (does not overwrite existing file)

- 1.2.17 Logged in user as part of the data-record

- 1.2.18 bpmn2 file reference in blip-DSL

- 1.2.19 How to add the missing icon images to a new created ENUM combo box

- 1.2.20 table DSL (as grid) create new data records

- 1.2.21 DSL entity (Multiplicity of properties)

- 1.2.22 UI-Design

- 1.2.23 DSL Dialog: Autosuggestion for DomainKey or DomainDescription

- 1.2.24 DSL Table

- 1.2.25 datamart DSL (condition filtered) table does not refresh

- 1.2.26 DSL authorization (CRUD)

- 1.2.27 DSL Menu(UserFilter/SuperUser)

- 1.2.28 Printer Selection (Default Printer)

- 1.2.29 DSL Entity Enum with own images

- 1.2.30 How to synchronize views

- 1.2.31 How to get new features from your toolbar

- 1.2.32 Add user information to your CRUD operations

- 1.2.33 Designing dialogs using multiple columns

- 1.2.34 Grouping attributes on dialogs

- 1.2.35 How units of measurements are handled

- 1.2.36 How sliders can improve the user experience with your dialogs

- 1.2.37 New ways to supply icons for enum literals

- 1.2.38 Validation

- 1.2.39 Extended Validation

- 1.2.40 Reset cached data

- 1.2.41 ReportDSL: How to get a checkbox for a Boolean attribute

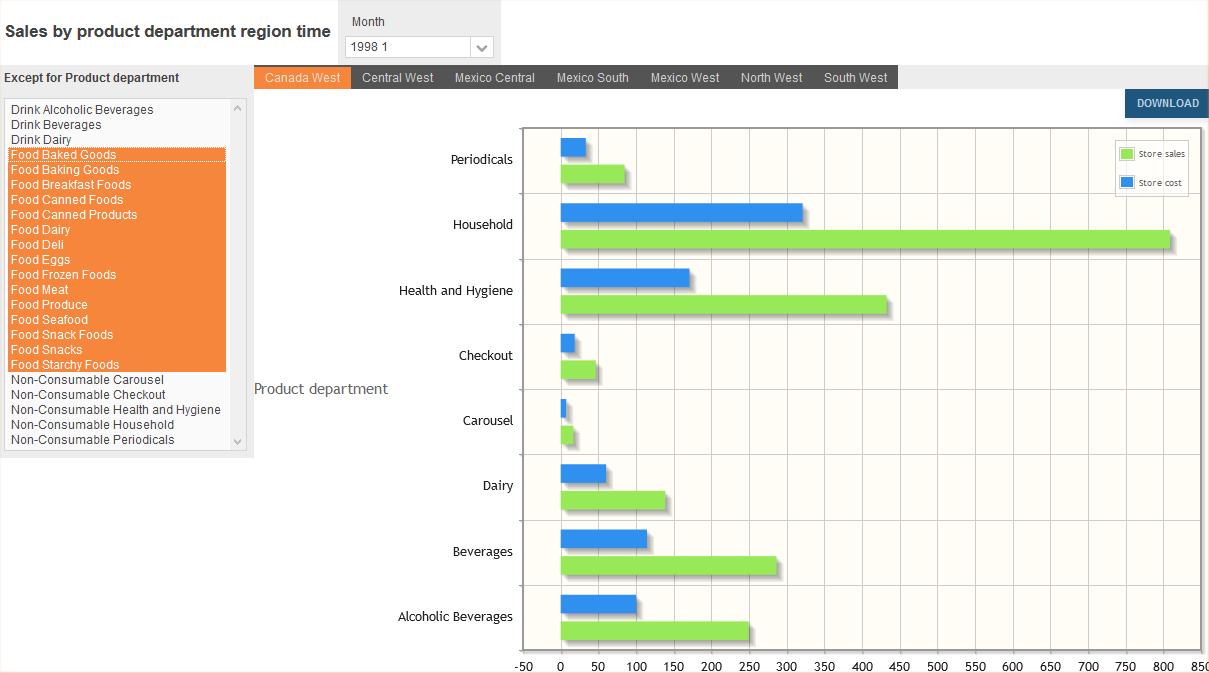

- 1.2.42 How to collect business data and presenting meaningful statistics with OS.bee - INTRODUCTION

- 1.2.43 How to collect business data and presenting meaningful statistics with OS.bee – PART1

- 1.2.44 How to collect business data and presenting meaningful statistics with OS.bee – PART2

- 1.2.45 How to collect business data and presenting meaningful statistics with OS.bee – PART3

- 1.2.46 How to collect business data and presenting meaningful statistics with OS.bee – PART4

- 1.2.47 Surrogate or natural keys in entity models?

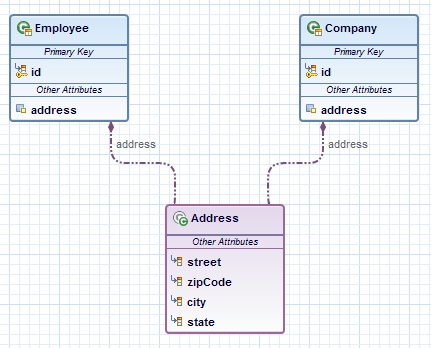

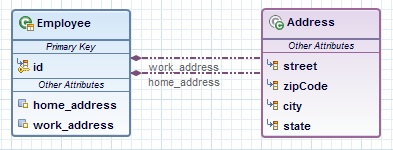

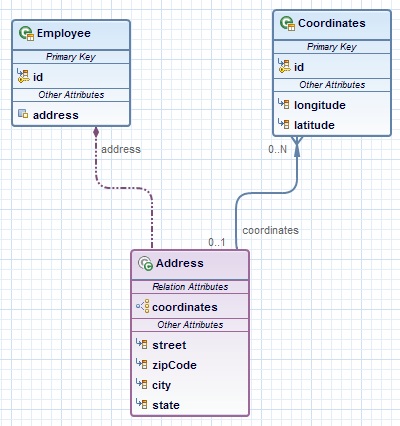

- 1.2.48 Using "embedded" entities

- 1.2.49 Improve toolbar functionality

- 1.2.50 Fill a new DTO entry with default values

- 1.2.51 Prevent visibility or editability in general

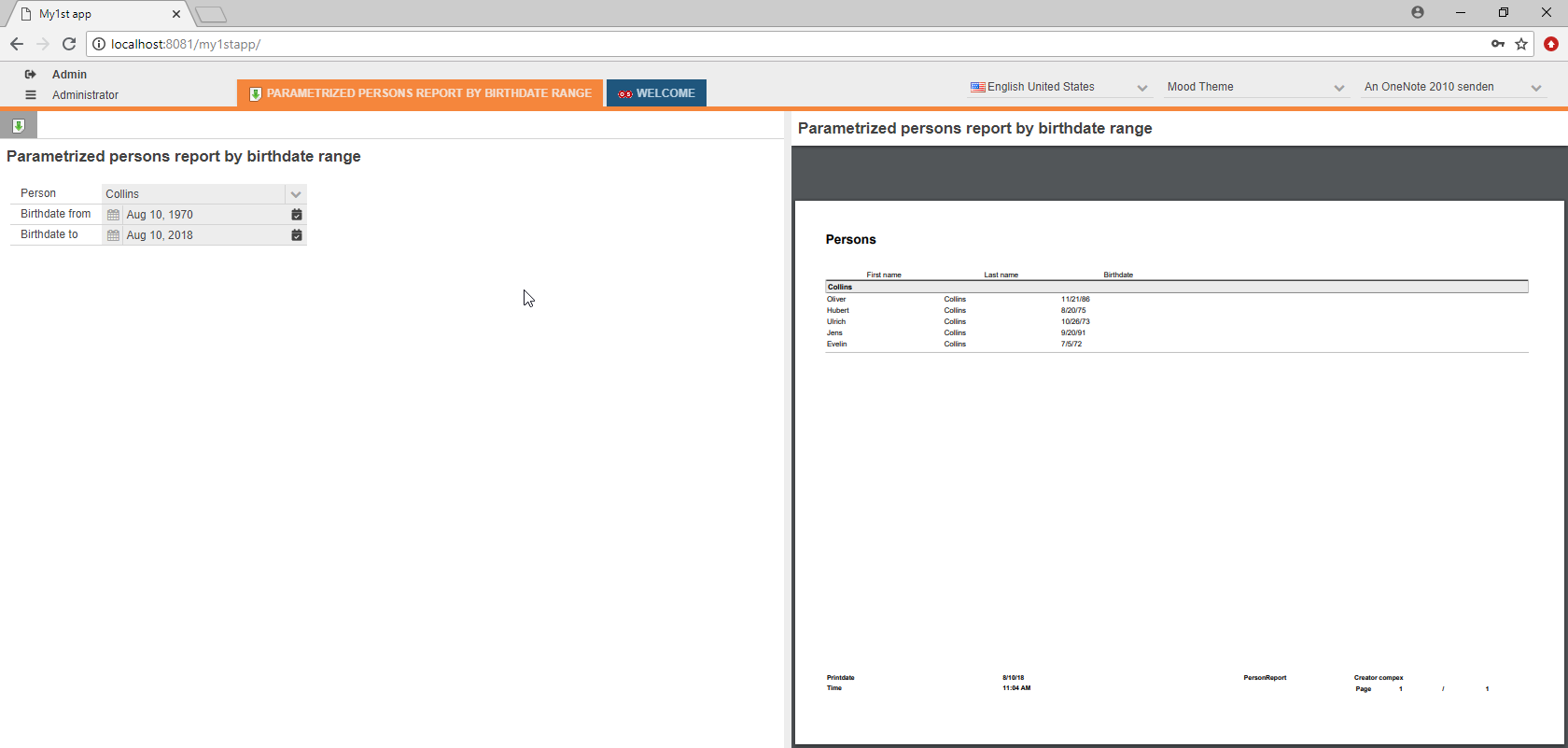

- 1.2.52 Parameterized Report

- 1.2.53 How to manage generated numbers with OS.bee

- 1.2.54 Enter new data using a "sidekick"

- 1.2.55 Faster development on perspectives

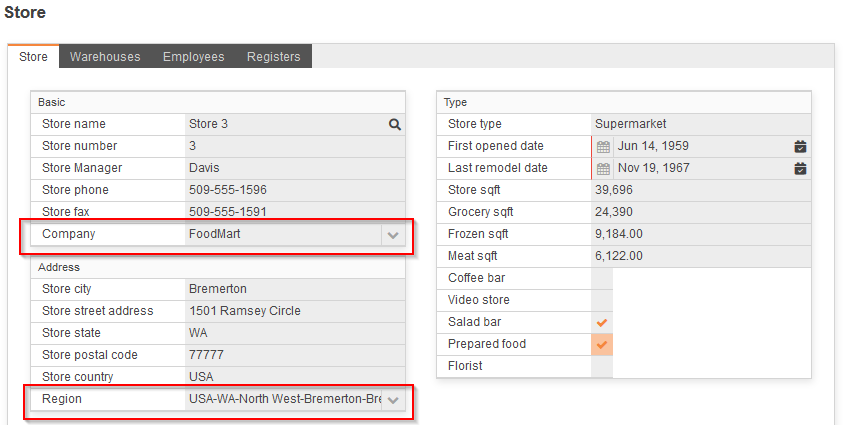

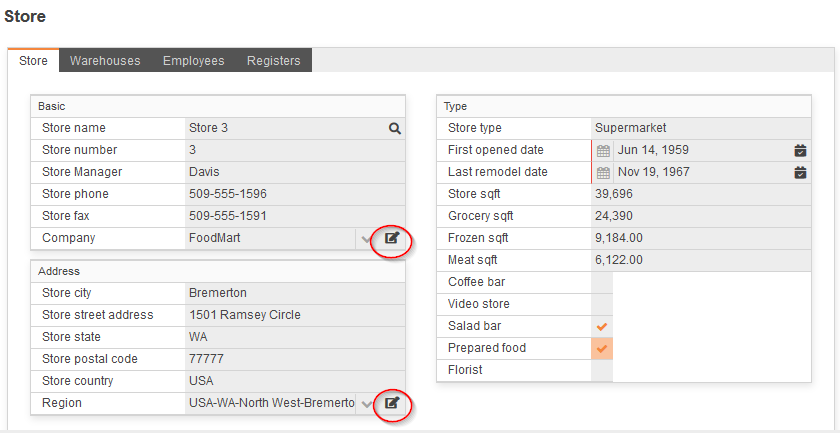

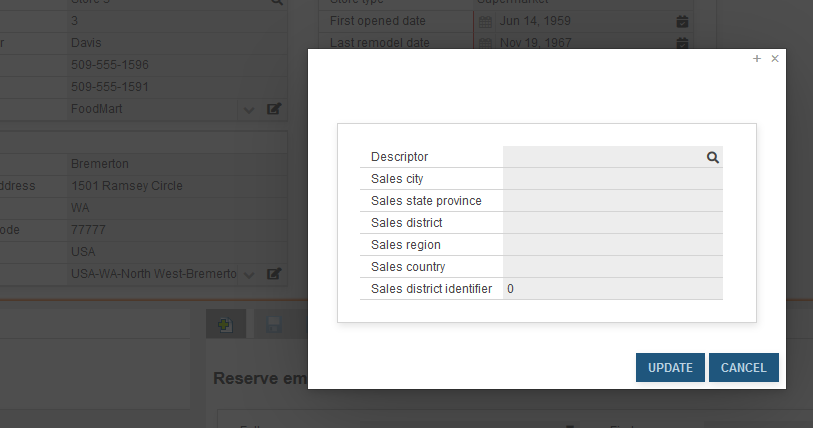

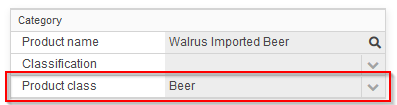

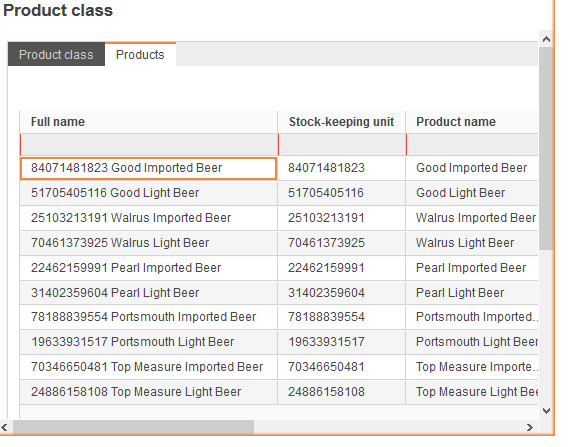

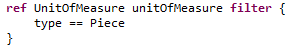

- 1.2.56 How to filter references

- 1.2.57 Enumerations as converters

- 1.2.58 State-Machines, UI, REST Api and free programming

- 1.2.59 Execute something by pressing a toolbar button

- 1.3 Core Dev

- 1.3.1 New event for statemachines

- 1.3.2 Duplicate translations

- 1.3.3 Edit WelcomeScreen

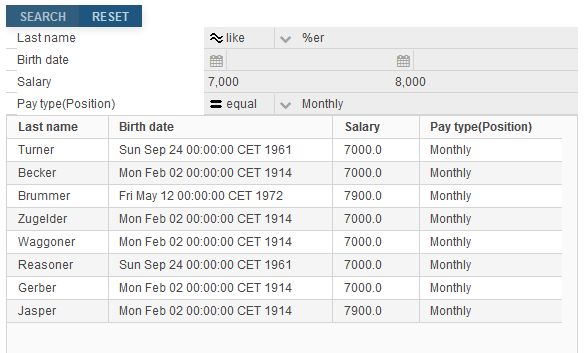

- 1.3.4 Search

- 1.3.5 DataInterchange is externally configurable by admins

- 1.3.6 Statemachine (FSM) handles external displays

- 1.3.7 Database Support

- 1.3.8 Combo box handles now more complex objects

- 1.3.9 Enriching csv files to highlight complex information

- 1.3.10 Max File Size BlobMapping

- 1.3.11 How to show JavaScript compile errors building the Widgetset

- 1.4 Apps

- 1.1 Get Started

- 2 Copyright Notice

OS.bee Documentation for Designer

This page collects frequently asked questions from designers that are not covered by the other documentation, together with their answers.

Get Started

Pitfalls with new Eclipse installations

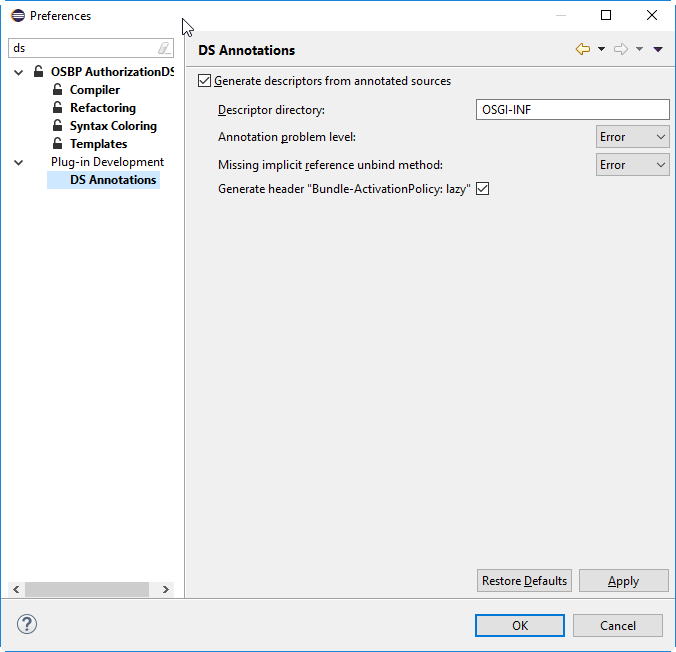

When installing a new Eclipse environment, open the preferences for DS Annotations and check the box "Generate descriptors from annotated sources". OS.bee makes heavy use of automatically generated component descriptors in the OSGI-INF directory, and the box is unchecked by default.

Don't forget to set your target platform correctly as described in the installation guide.

Eclipse Installation / Installation SWF / New Project from GIT

Question:

Using Eclipse Neon, I ran the Software Factory installation as described in the installation notes and connected to a GIT archive. After building the workspace, the application is not valid (see screenshot). Cleaning the project did not help.

Answer:

The version of the installed Software Factory and the version needed for the project do not match.

Please install the appropriate Software Factory version.

csv2app - question regarding ENUM types

Question:

csv2app is mentioned in the documentation "App up in 5 minutes" and makes it possible to create an app directly from a CSV file. One of the first steps is to create an entity, which is generated from the first line of the file (the line that contains the column names). This leads to 2 questions regarding ENUMS:

- Is it possible to create an ENUM from the CSV file?

- Is it possible to use an existing ENUM during the creation of the app?

For example:

The entity model already contains this definition:

enum type_ps {

PROCESS_DESCRIPTION, ORGANISATIONAL

}

and the csvfile looks as follows:

ticket_type_number;ticket_type_description;ticket_type_ps_type

1;CRS handling;PROCESS_DESCRIPTION

2;Administrative;ORGANISATIONAL

3;Delivery package;ORGANISATIONAL

4;Software behaviour;PROCESS_DESCRIPTION

Answer:

Yes, it is possible. With the latest version (from February 2018), you can supply various meta-information for each column. One such item is a hint to the application builder that the column is meant to be an ENUM. Without this hint, the builder cannot guess that fact.
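The following Python sketch illustrates why such a hint is needed and what it implies for the data. It is not how AppUpIn5Minutes works internally; the function name `check_enum_column` and the hard-coded literal set are hypothetical and only mirror the example from the question. The sketch checks that every value in the hinted column is a literal of the existing enum `type_ps`.

```python
import csv
import io

# Literals of the existing enum type_ps from the example above.
TYPE_PS = {"PROCESS_DESCRIPTION", "ORGANISATIONAL"}

CSV_TEXT = """ticket_type_number;ticket_type_description;ticket_type_ps_type
1;CRS handling;PROCESS_DESCRIPTION
2;Administrative;ORGANISATIONAL
3;Delivery package;ORGANISATIONAL
4;Software behaviour;PROCESS_DESCRIPTION
"""


def check_enum_column(text, column, literals):
    """Return the values in `column` that are not valid enum literals."""
    reader = csv.DictReader(io.StringIO(text), delimiter=";")
    return [row[column] for row in reader if row[column] not in literals]


# Hypothetical check: an empty list means the column fits the enum.
invalid = check_enum_column(CSV_TEXT, "ticket_type_ps_type", TYPE_PS)
print(invalid)
```

A plain string column and an ENUM column look identical in the CSV file, which is why the builder depends on the explicit hint.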

Launch Application from Eclipse (very slow)

Question:

When I start the application from within Eclipse, it takes a very long time until the application is up. Are there settings that control this?

Answer:

If you experience very slow performance with Eclipse itself as well as with the application you launch from Eclipse, it might be a good idea to check the virus scanner you have installed. Some virus scanners check all the files inside the Eclipse installation directory, the Eclipse workspace and the GIT repository, which can lead to extremely slow performance. Ask your administrator how to avoid this.

Structure of the documentation page

On the OS.bee Software Factory Documentation page, 3 headlines are used to structure the page:

- OS.bee DSL Documentation

- Other OS.bee-Specific Solutions

- OS.bee Third-Party Software Solutions

At the end of chapter one there are some helpful hints for working with Eclipse. They can be very useful when you start with Eclipse and the SWF.

- One more hint: use CTRL-SHIFT-O to organize the imports inside the DSL.

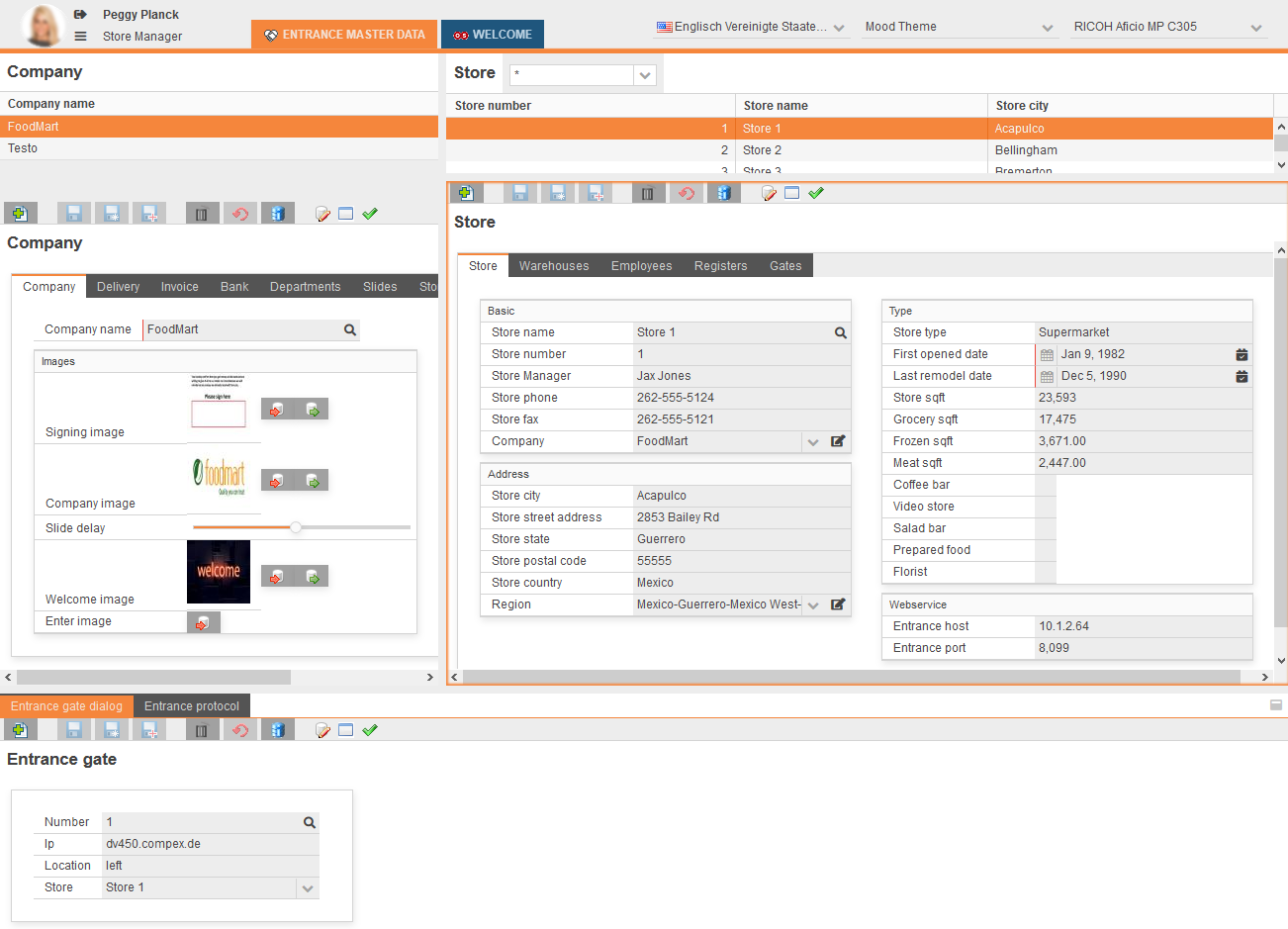

Setup Foodmart MySQL database and data--PART1

Foodmart is an example application in which all important modeling use cases are used and can be tested. The Foodmart data and entity model were derived from the famous example of Pentaho Mondrian.

This is a short introduction about how to configure a MySQL database and import Foodmart-data.

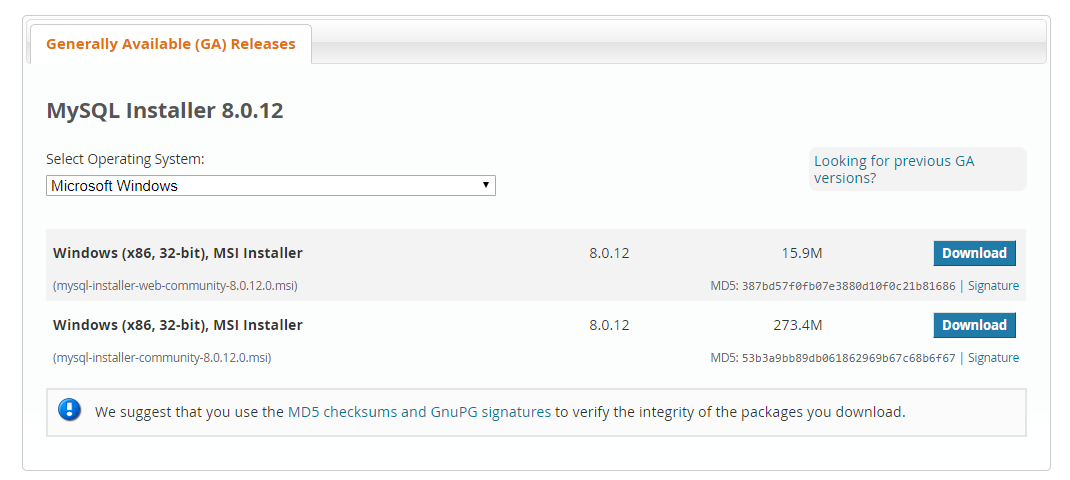

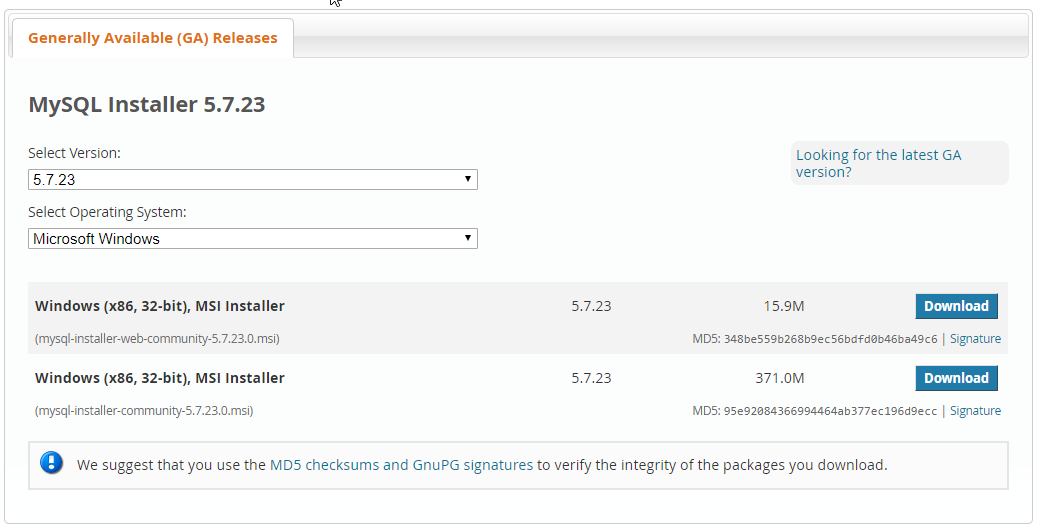

First of all, you have to install a MySQL server. This introduction refers to version 5.7 of MySQL for Windows.

So you have to select "Looking for previous GA versions?" and will get this screen:

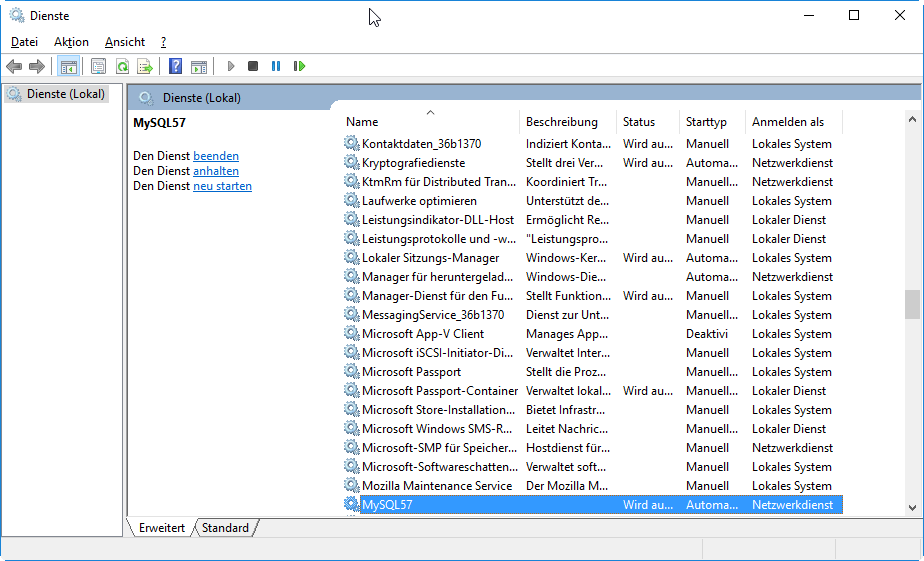

Download the mysql-installer-community-version and follow the instructions of this installer. After successful installation you'll have a new service:

If not already running, start the MySQL57 service or reboot your machine.

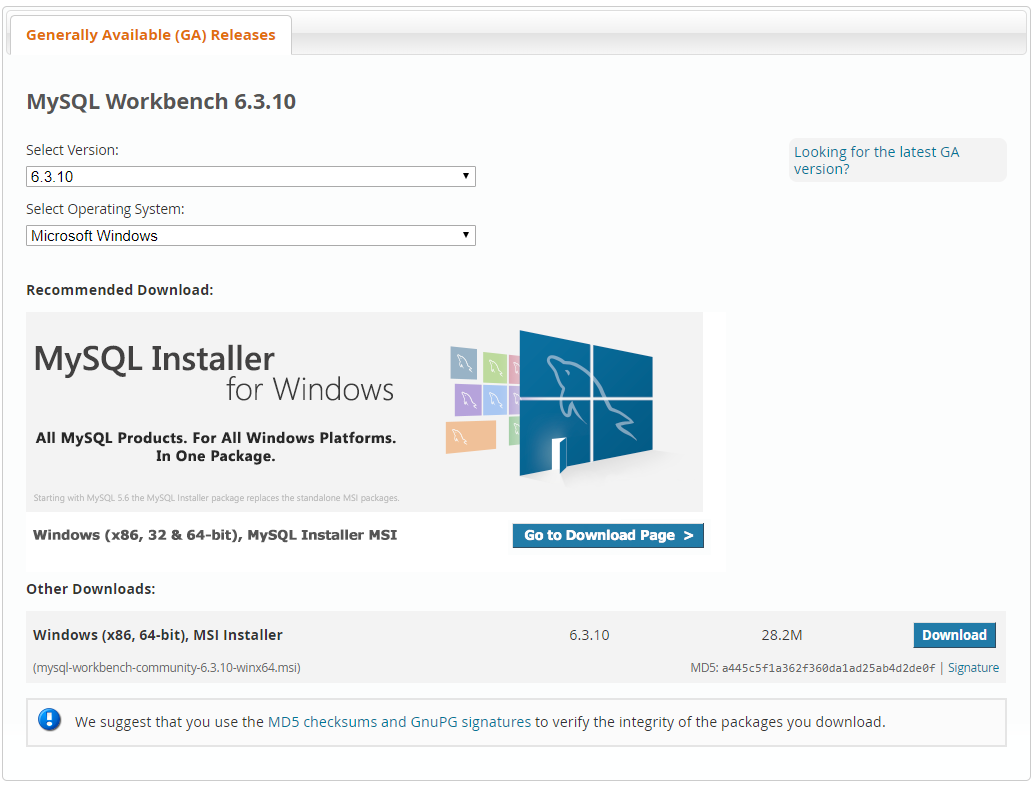

Then you install MySQL Workbench. As we use an older version here you must select "Looking for previous GA versions?" and you'll get this screen:

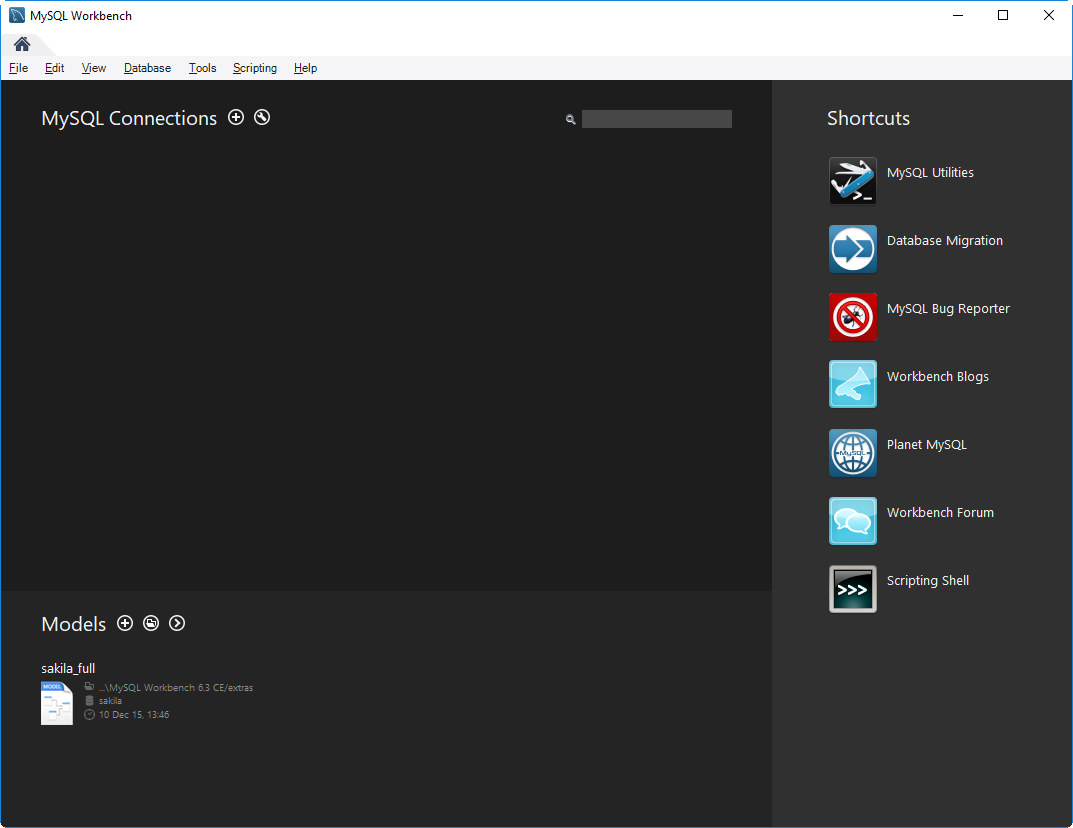

Download and install the workbench. After successful installation, open the workbench and create a new connection by clicking the + symbol:

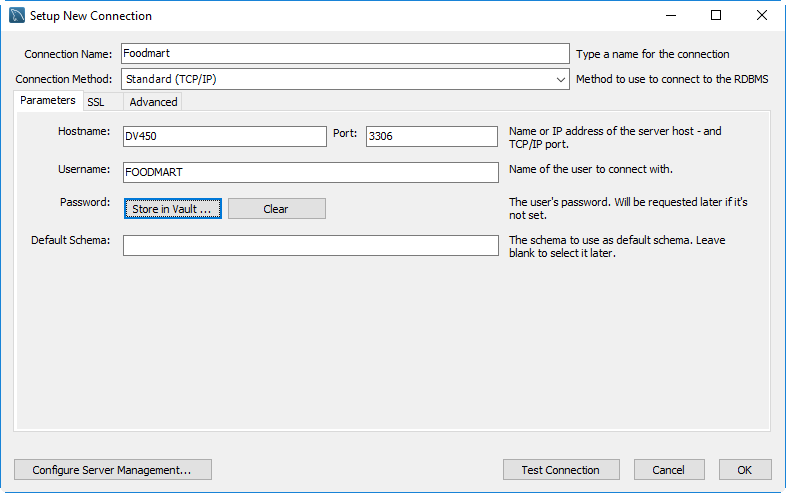

Create a new connection like this:

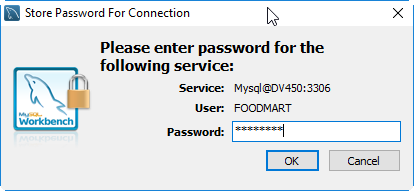

Store the password "FOODMART" in capitals in Vault:

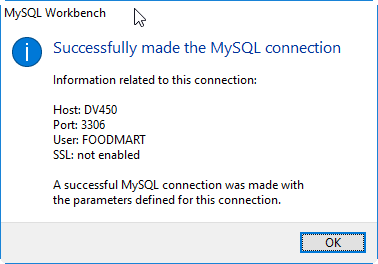

Test the connection:

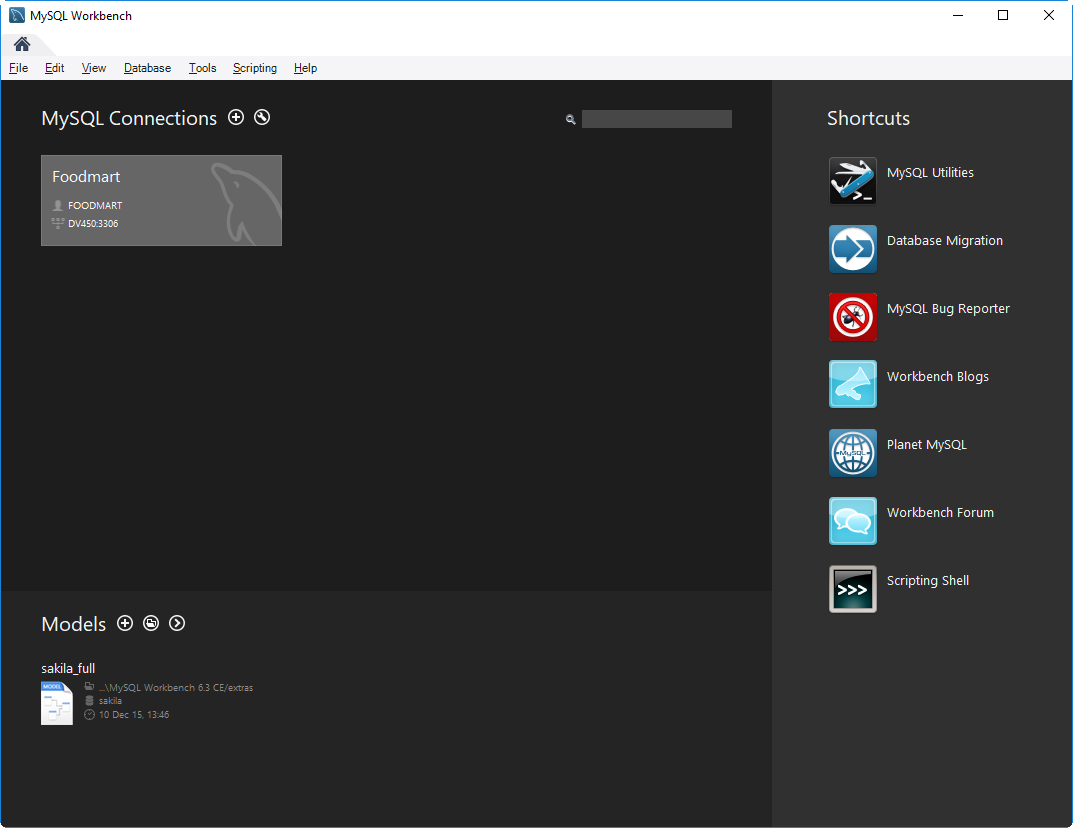

Your workbench should look like this afterwards:

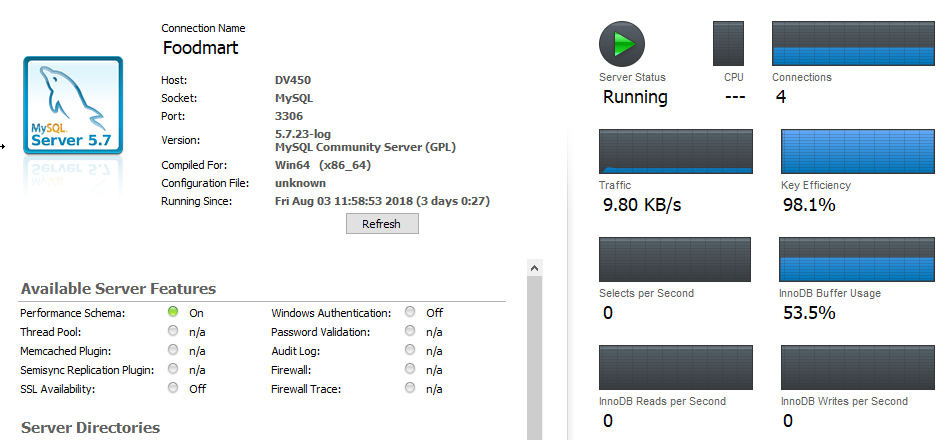

After you have clicked on Foodmart (which is the name of your connection here), the workbench opens with the navigator and you can check the server status:

Setup Foodmart MySQL database and data--PART2

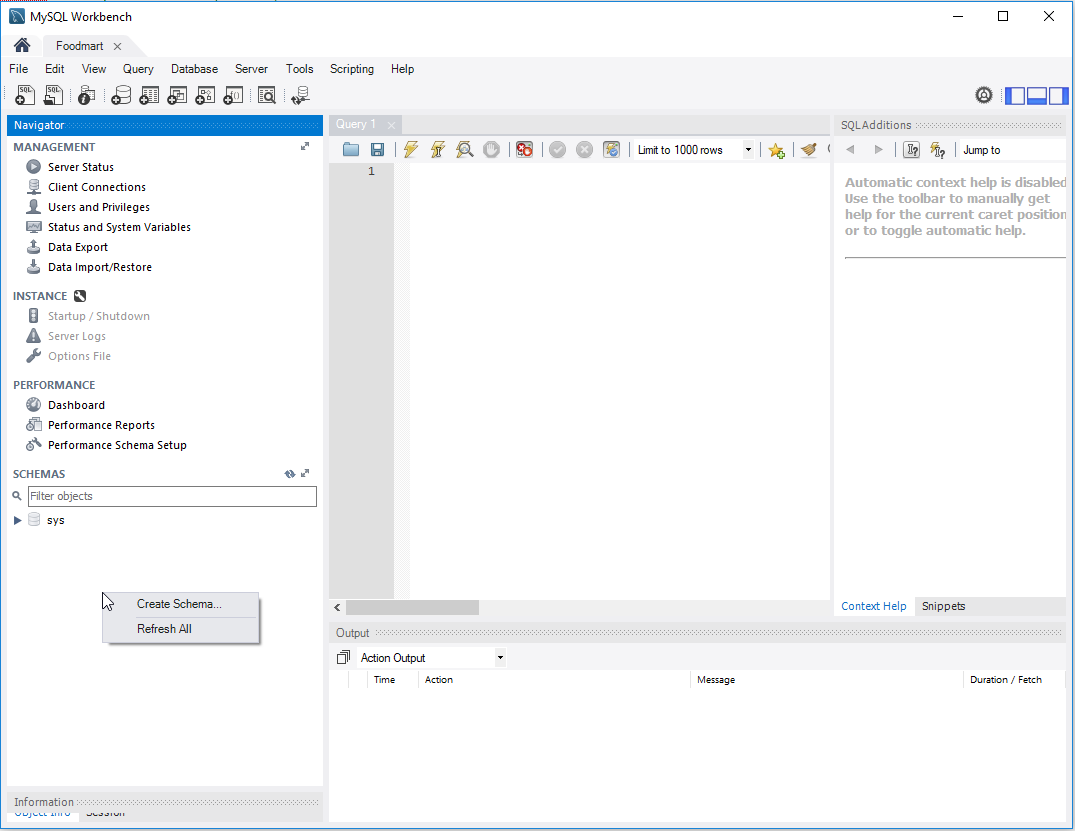

After your server is set up, right-click in the SCHEMAS area of the Navigator and create new schemas:

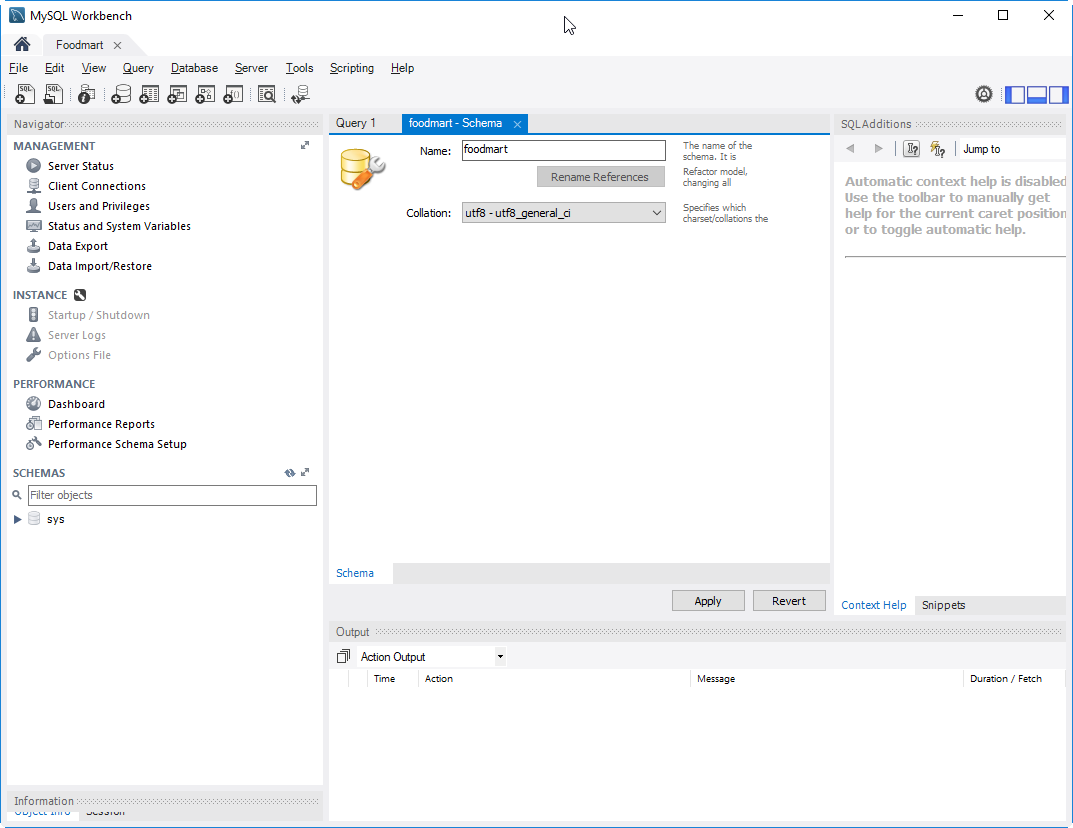

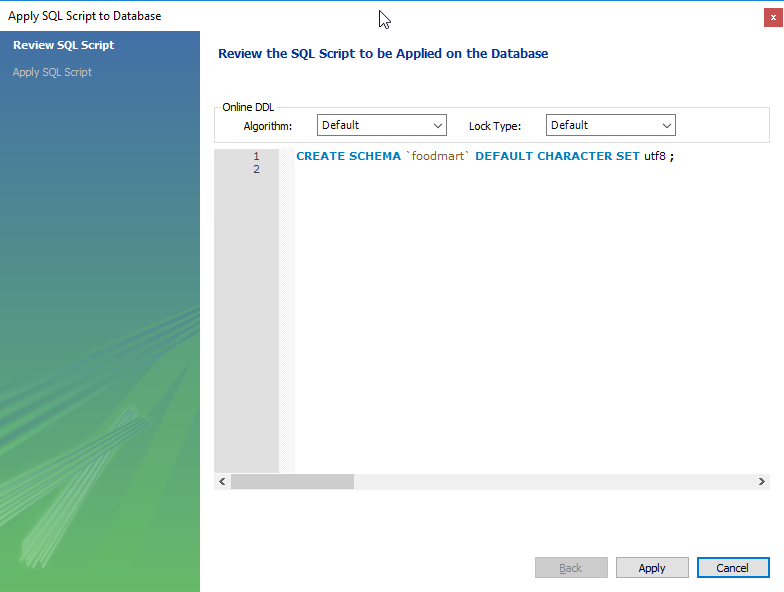

Create a schema named foodmart, which will be your database later on. Don't forget to select utf8 encoding as shown here:

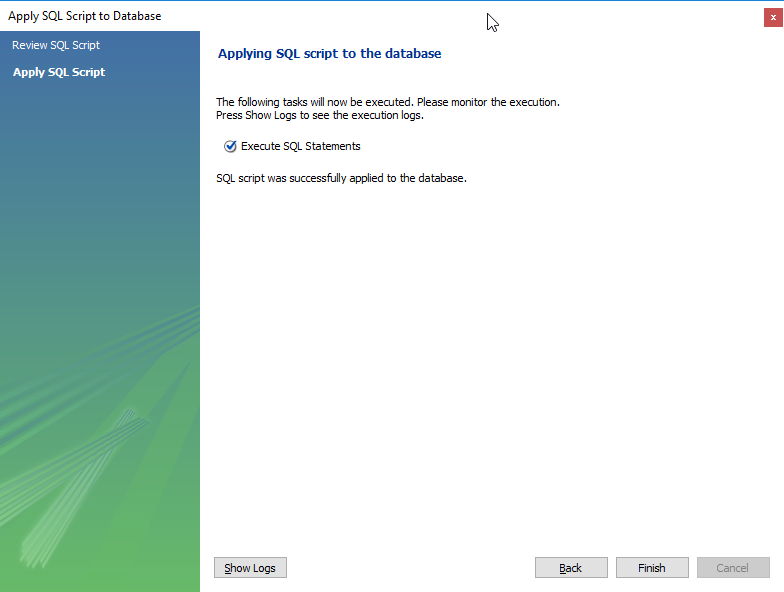

Follow the steps:

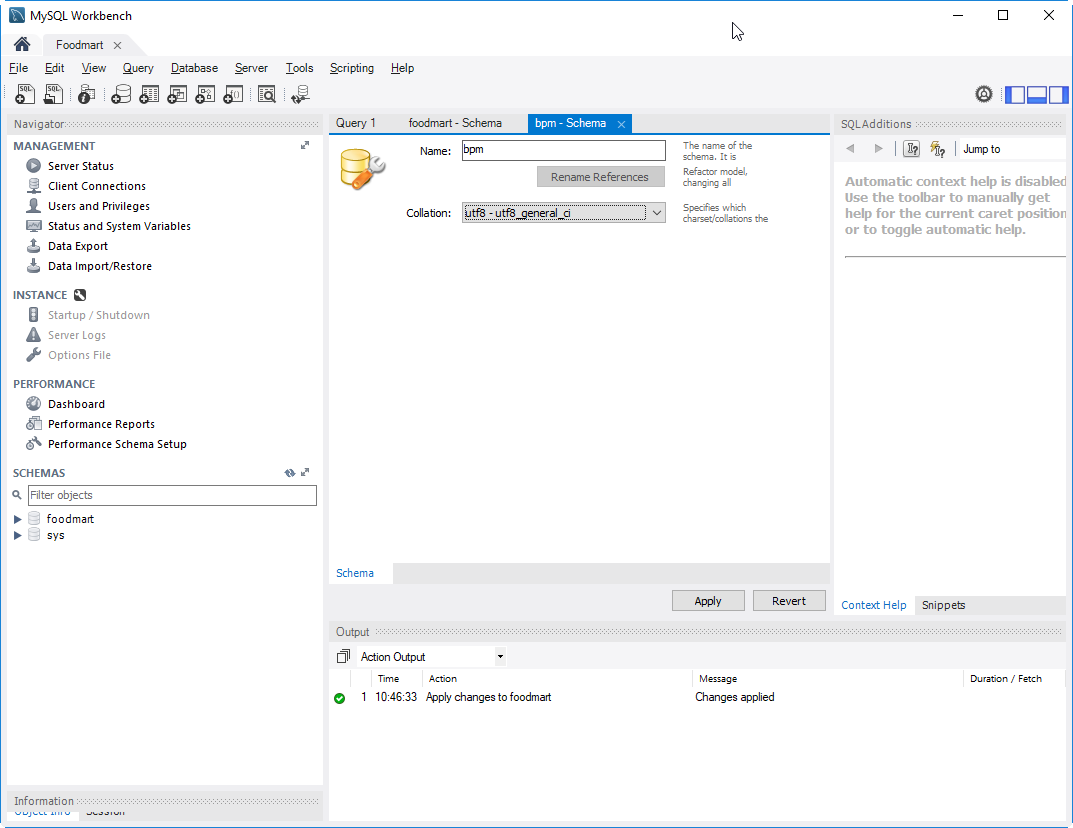

Also create a bpm schema and follow the steps described before:

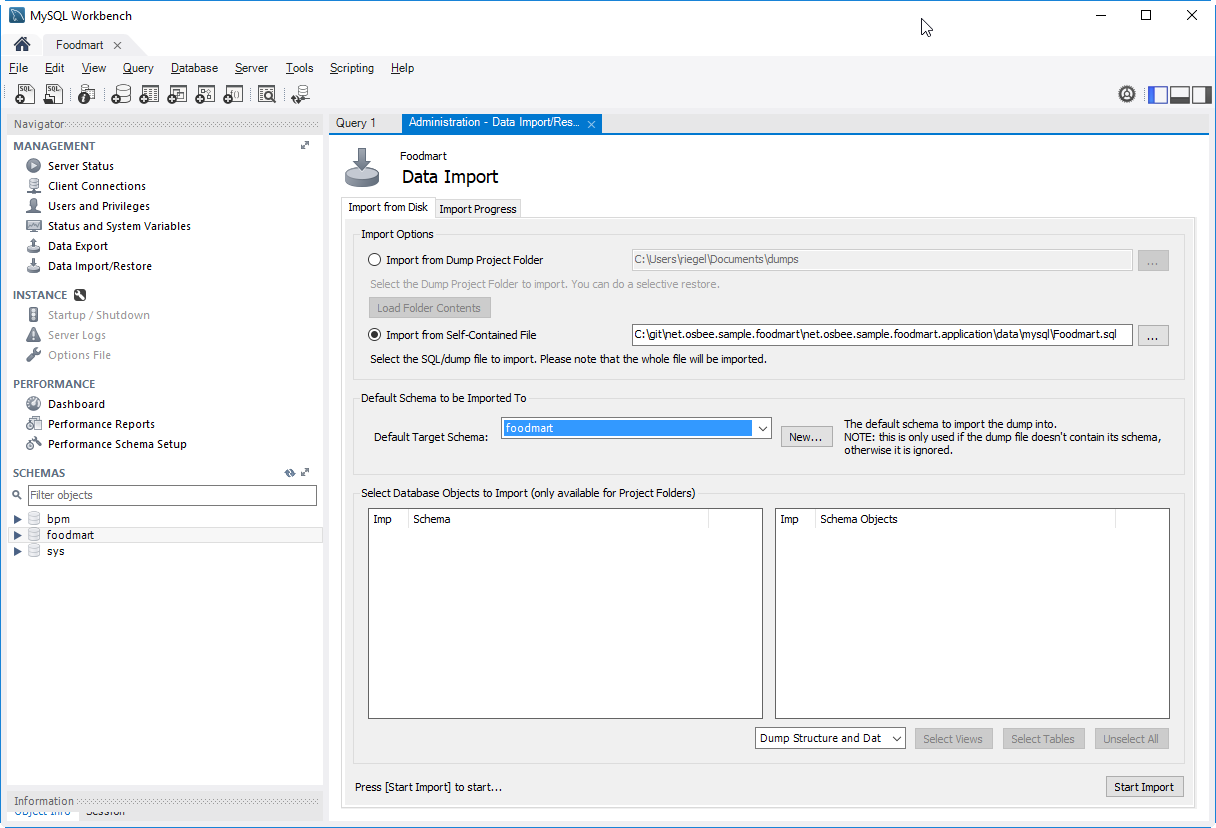

Now you can start the data import with Server -> Data Import:

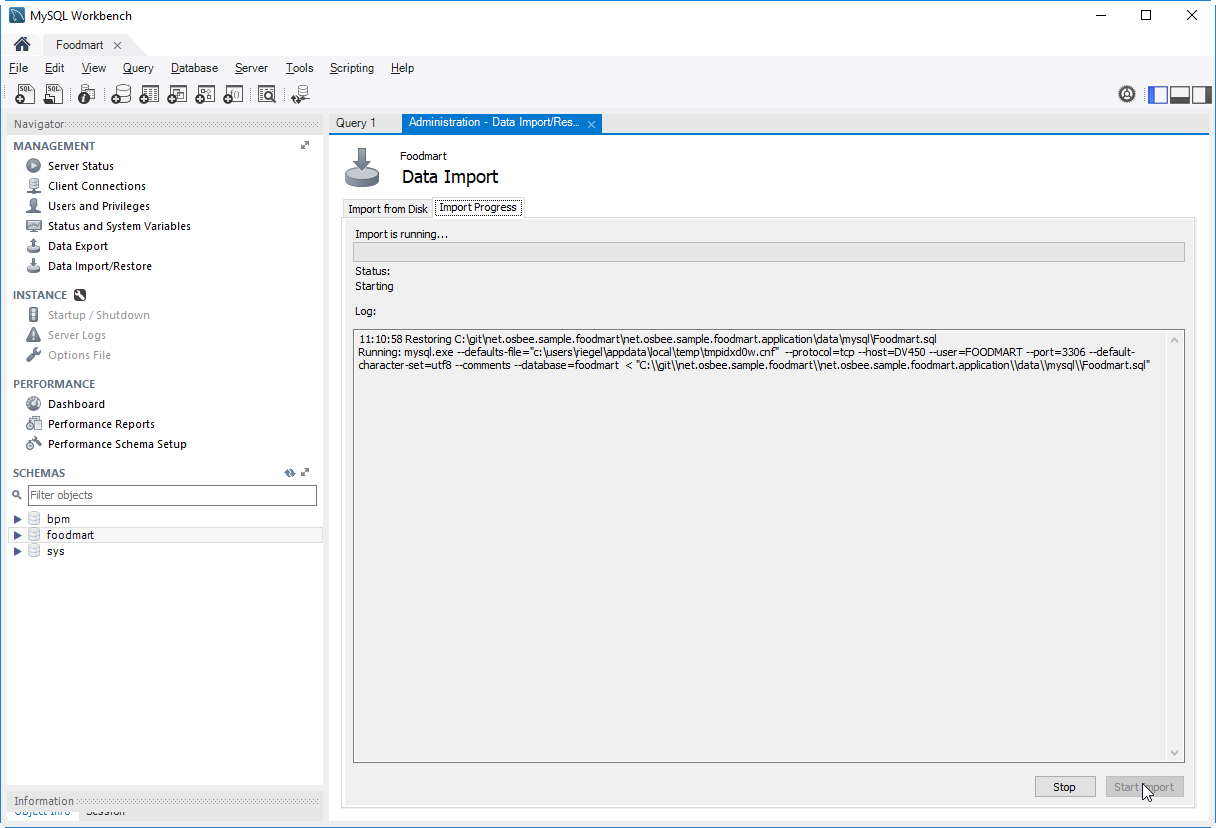

Press "Start Import":

Now the database foodmart is filled with the appropriate data.

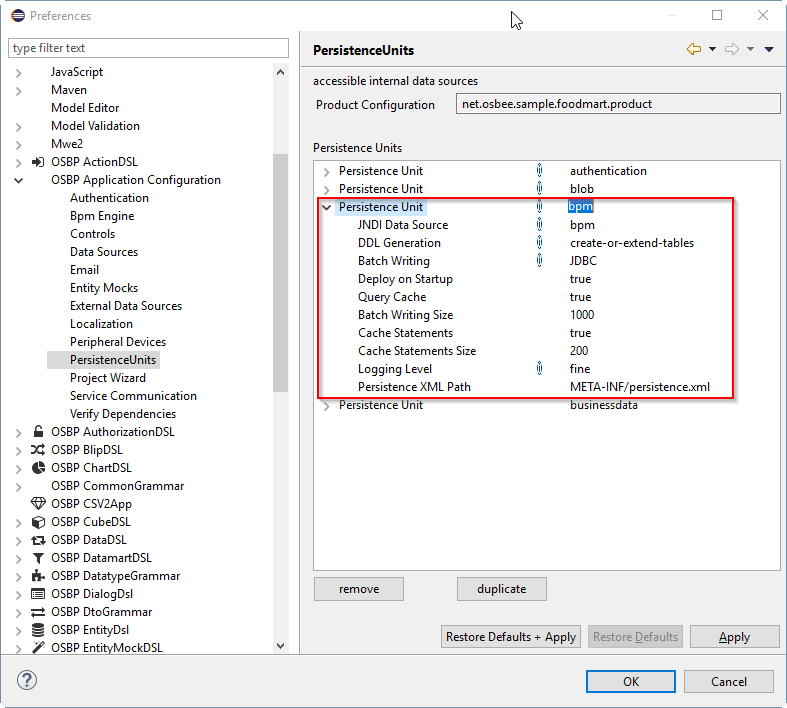

Setup Foodmart MySQL database and data--PART3

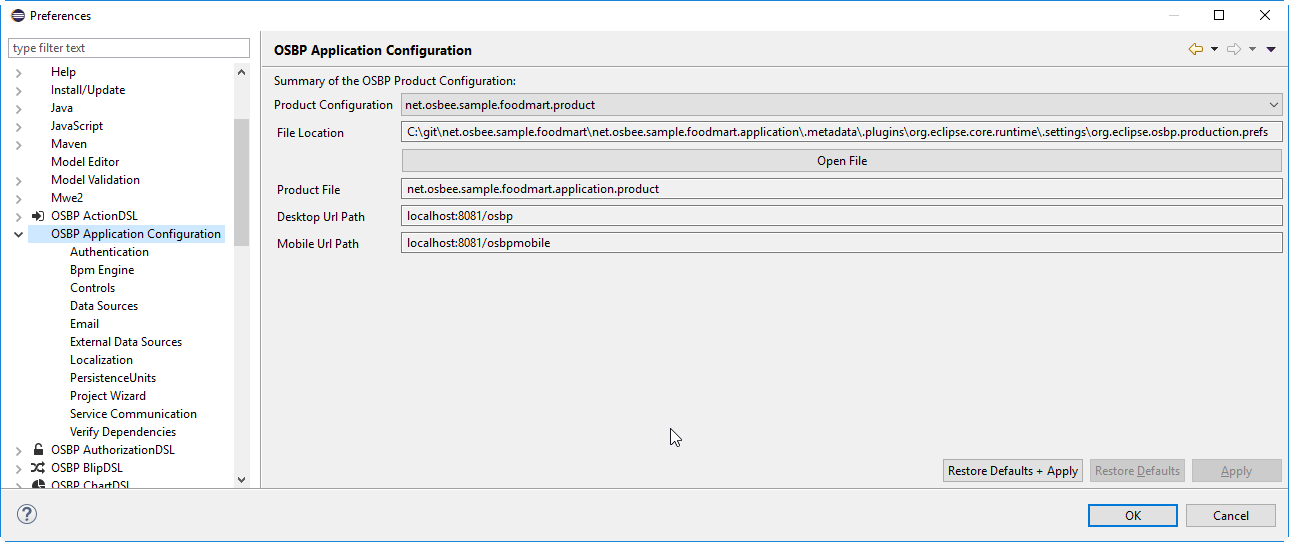

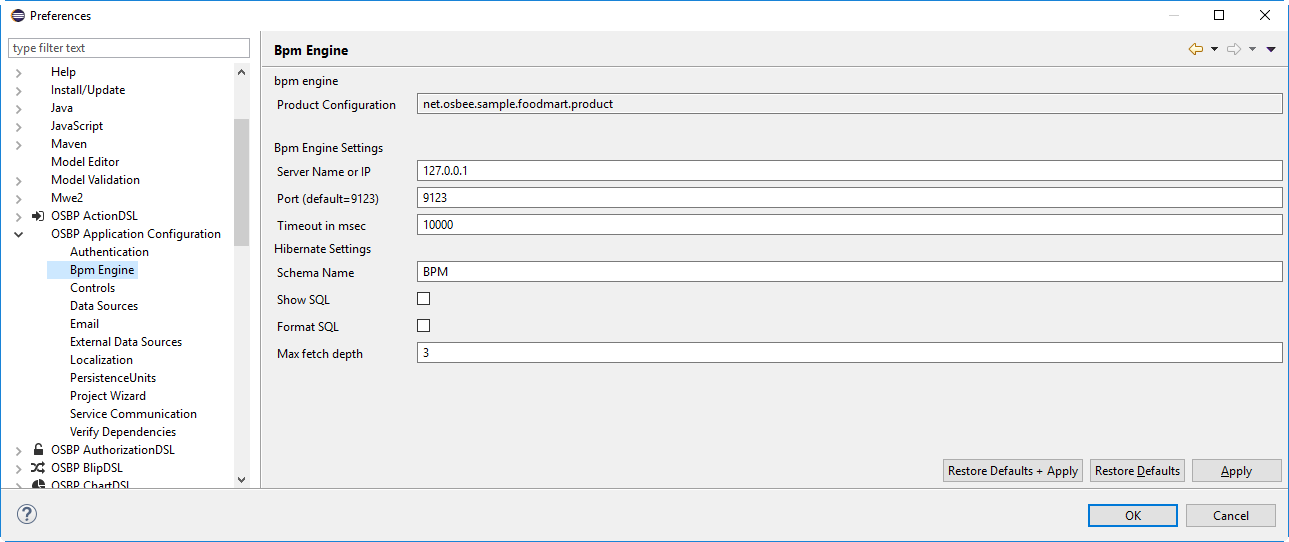

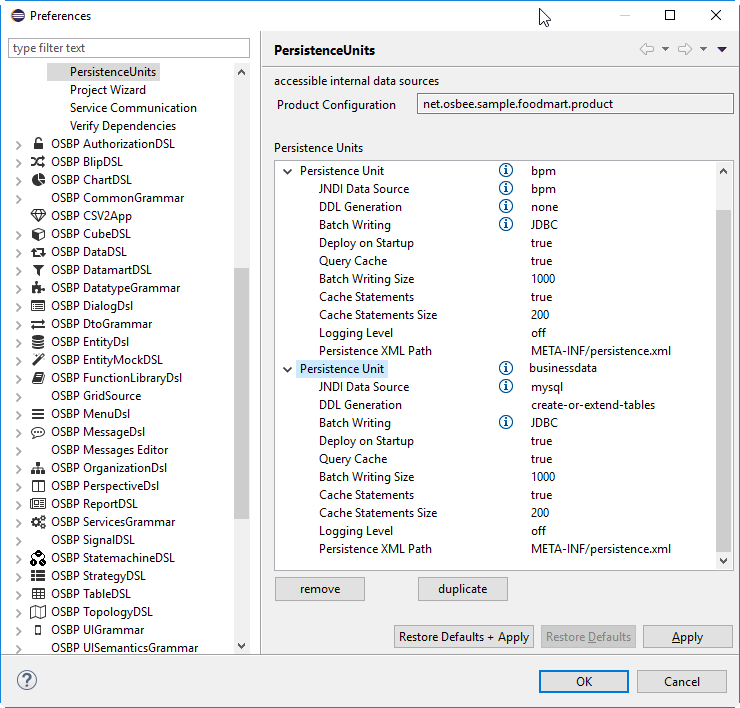

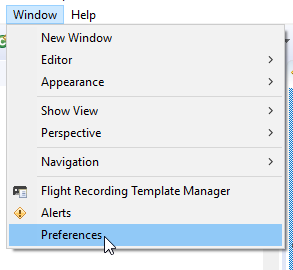

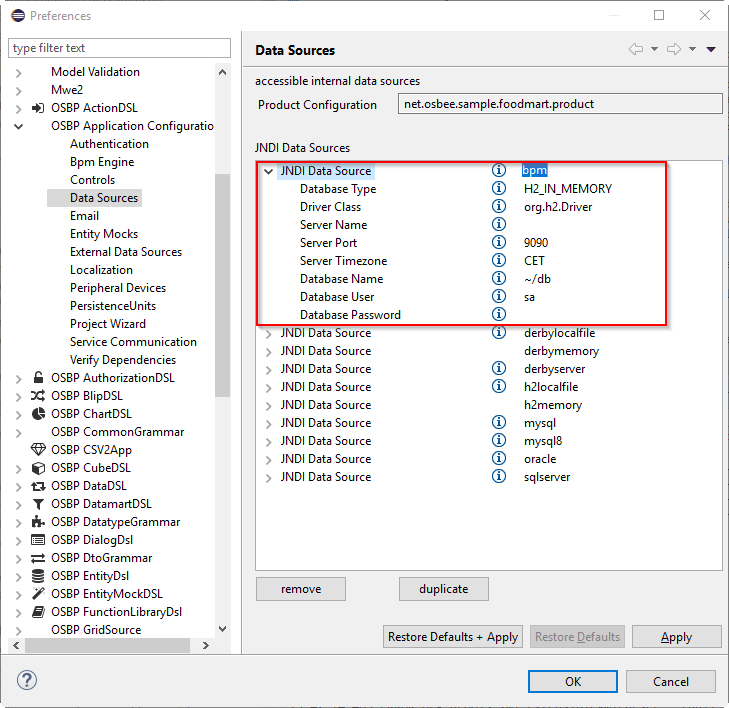

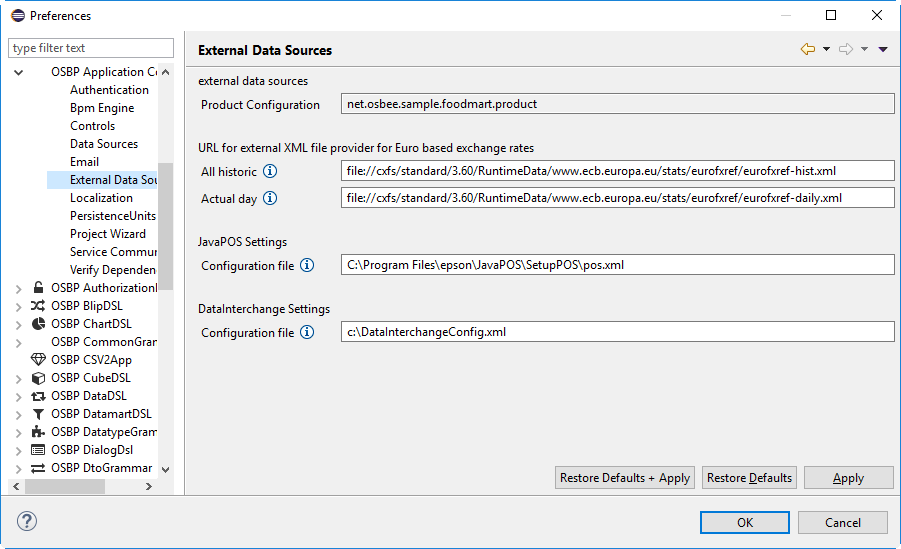

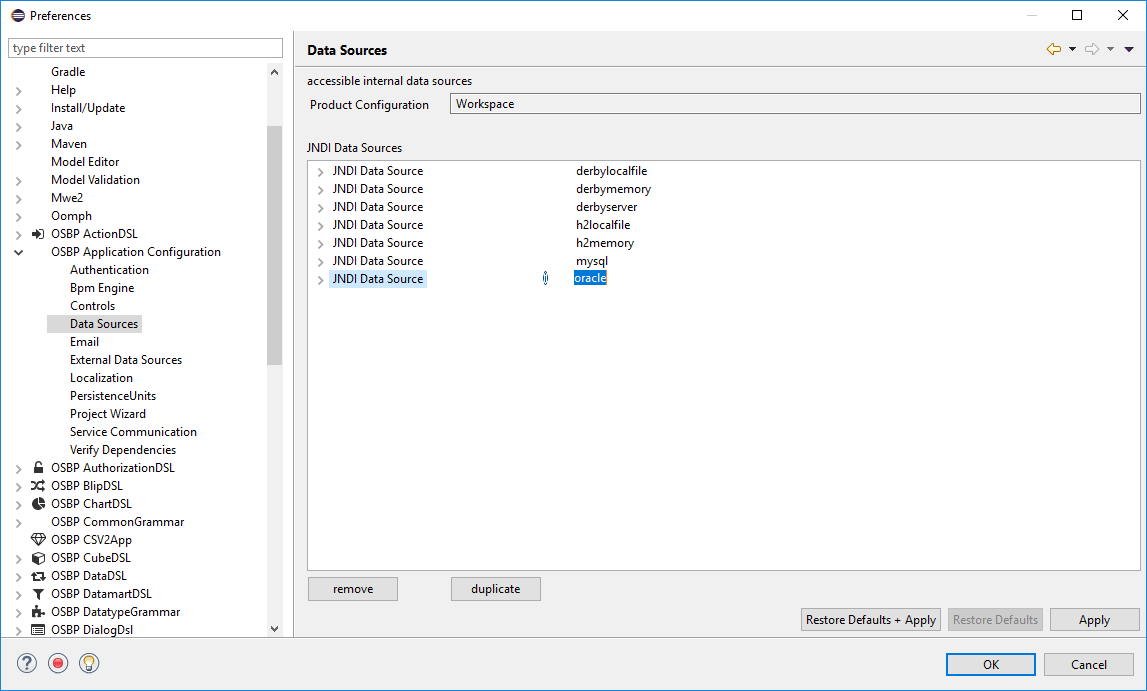

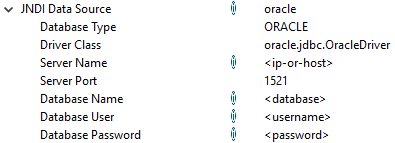

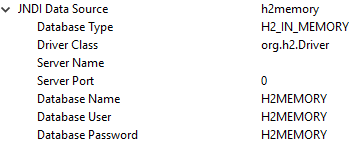

After the database foodmart is filled, there are some settings to change for the first start of OS.bee with foodmart data. In your IDE open Window->Preferences->OSBP Application Configuration:

Double check whether you selected the product in the configuration and NOT the workspace. Check the database name for the BPM settings to be BPM so it matches the MySQL database settings.

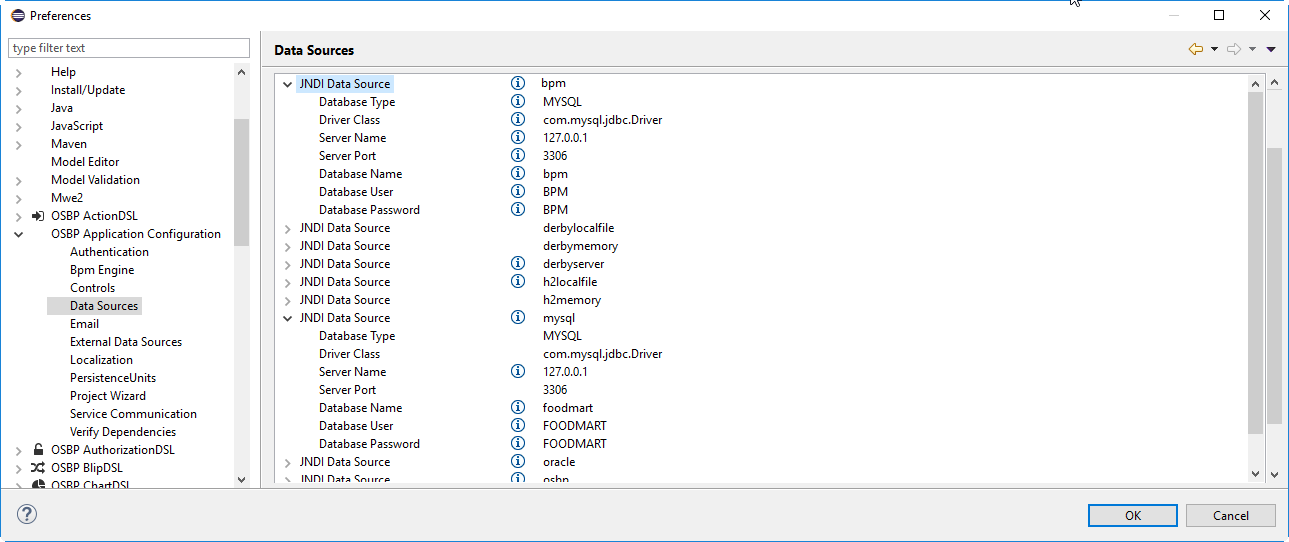

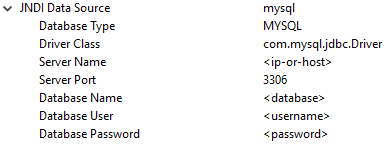

Adjust the JNDI Data Source settings so that bpm and mysql have the right parameters:

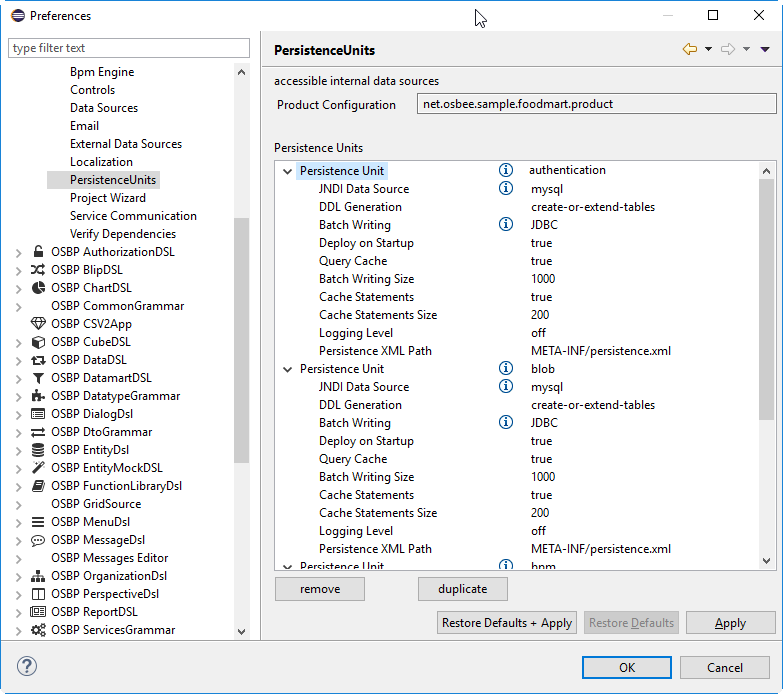

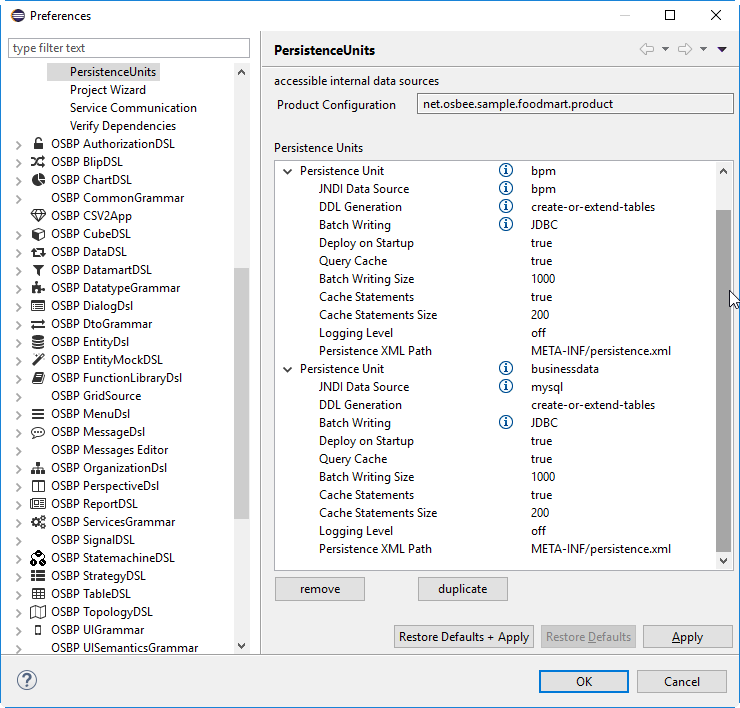

There are 4 different Persistence Units that must be configured for OS.bee:

- authentication

- blob

- bpm

- businessdata

They must look like this for MySQL:

For the first start you must force BPM to create new tables as we haven't already created them. DDL Generation must be set to create-or-extend-tables to do so.

When you are done, press Apply and then OK. You must press Apply before OK because a bug in Eclipse prevents everything from being saved if you just press OK. Then start the application for the first time. It will not come up after creating the BPM tables. Stop the application after a while, re-enter Preferences->Persistence Units and change the DDL Generation setting for BPM to none.

The foodmart application should work now with your own MySQL database.

Working with the H2 DB

As you might know, H2 is a simple but effective small-footprint database that requires no installation effort; OS.bee ships with the needed bundles. H2 can be defined as an in-memory database or as a file-based database. If it is configured as an in-memory database, the content is lost as soon as the OS.bee application server is shut down.

- How to create a H2localFile data source

You can use H2localFile for all data sources but make sure to give each data source an individual database name. In this example we want to configure a data source called bpm in order to use it as database for BPM persistence.

- Switch to Data Sources and fill the fields according to the following image:

- The database name "~/db" forces the database file to be created in the Windows user's home directory, where the user has the necessary file creation rights. Of course you can use any other directory for which you have ensured appropriate rights.

- User name and password can be chosen according to your own taste.

- The port is free to choose but should not collide with other definitions in your system. The port+1 also should be unused by other services as it will be used by an internal H2 web server as you will see later on.

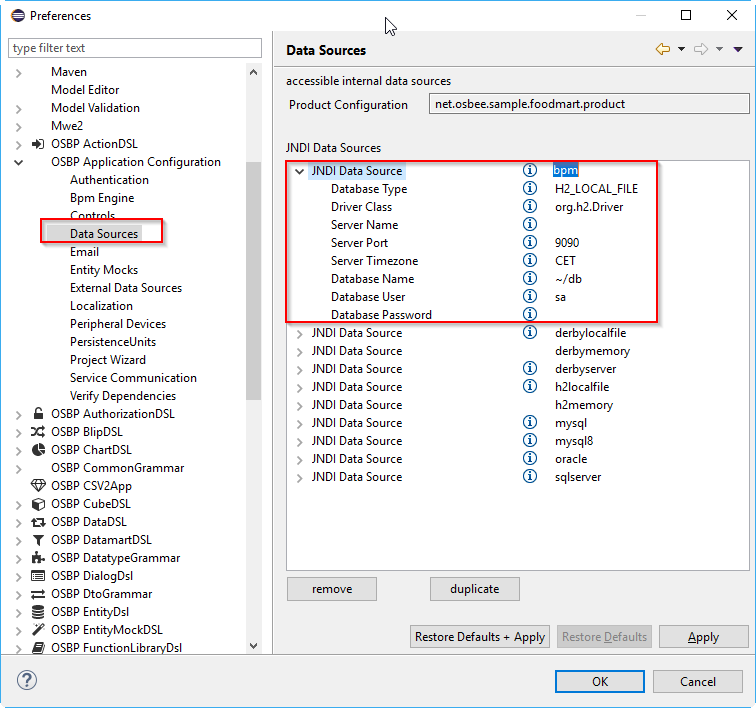

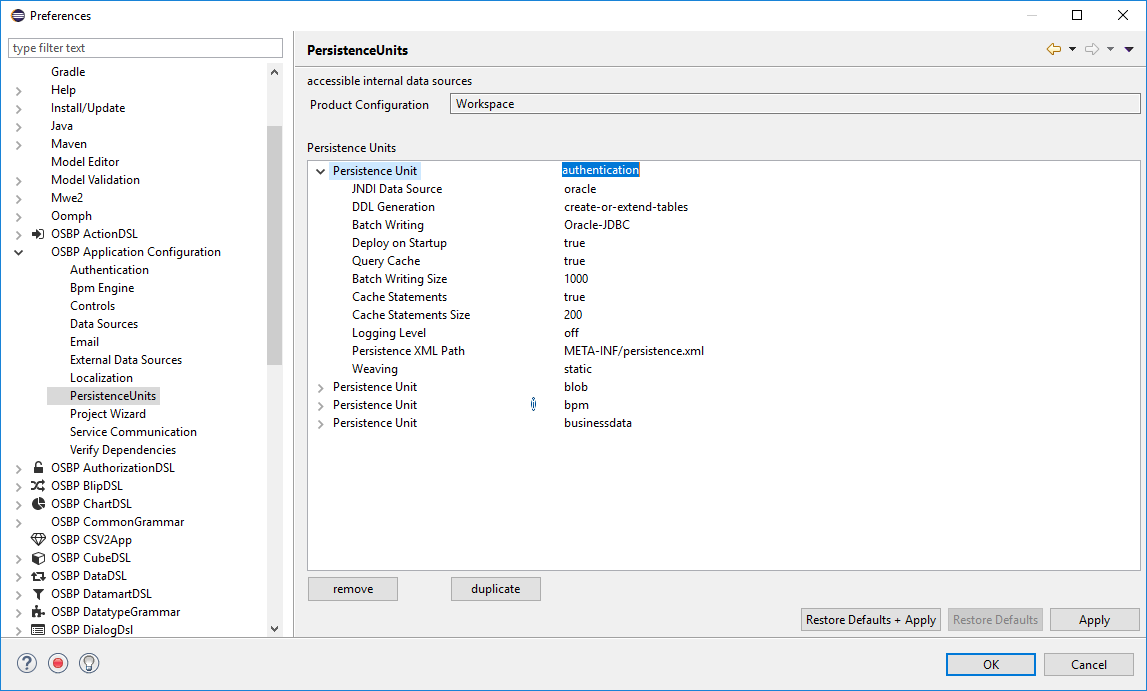

- Once this is done, switch to PersistenceUnits and fill the fields for bpm according to the next image:

- Make sure to have create-or-extend-tables selected for all persistence units. This creates all tables defined via EntityDSL and keeps them up to date as the models evolve.

- Logging level can be set to OFF after everything works as expected.

- How to create a H2InMemory data source

- Use the following image to manage the data source settings.

- The only change is the database type. Although an in-memory database has no physical file, it still needs a name that identifies it as if it were located in the user's home directory if you want to access the in-memory database remotely later.

- Persistence unit settings are the same as above.

- How to inspect H2 database content

If you want to issue SQL statements against the database yourself, you can use the web server that is started automatically when H2 is used. Its port is the port given in the data source + 1.

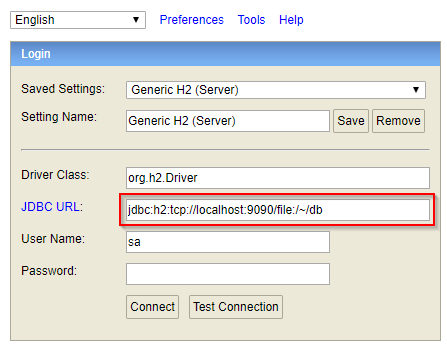

If you open a browser at localhost:<port> (localhost:9091 in the example), you are presented with this page:

Select Generic H2 (Server) and modify the JDBC URL according to the data source settings. Set the port and the database path for the H2LocalFile type.

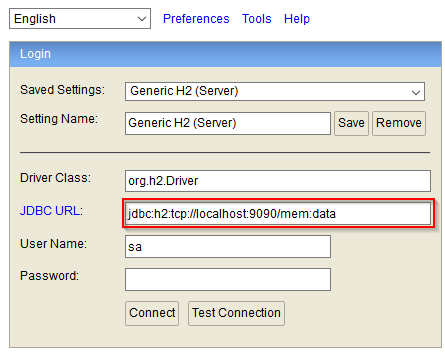

Modify the JDBC URL according to the data source settings for the H2InMemory type:

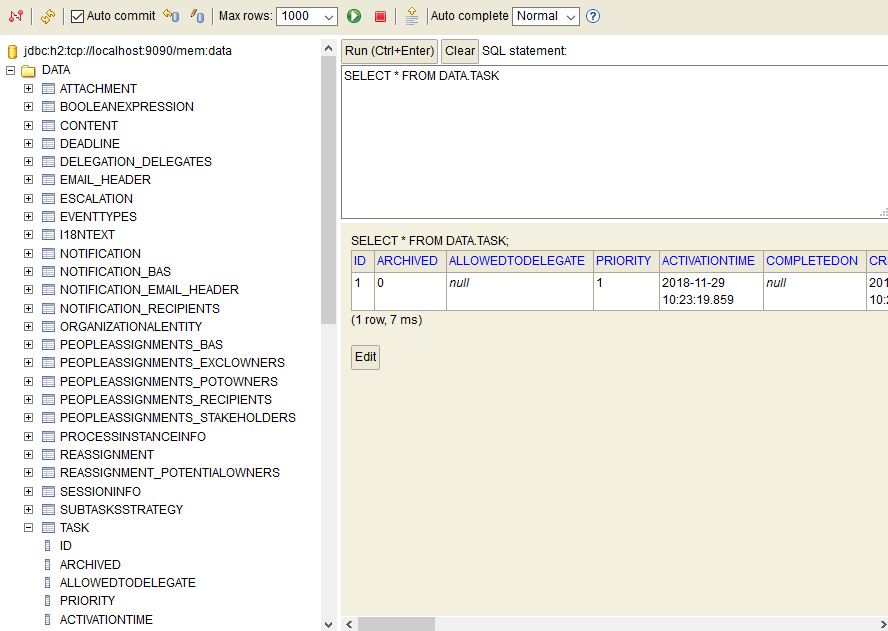

- If the connection succeeds with either setting, a new page opens where you can explore the whole database model and issue SQL statements against the database.
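The port arithmetic and the two URL variants can be summarized in a short Python sketch. The helper names are hypothetical, and the URL shapes follow the standard H2 TCP server syntax (`jdbc:h2:tcp://host:port/path` and `jdbc:h2:tcp://host:port/mem:name`), which is an assumption about what the screenshots show. Compare the result with your own data source settings before relying on it.

```python
def h2_console_url(data_source_port, host="localhost"):
    """The H2 web console listens on the data source port + 1."""
    return f"http://{host}:{data_source_port + 1}"


def h2_jdbc_url(data_source_port, database, in_memory=False, host="localhost"):
    """Build a JDBC URL for the 'Generic H2 (Server)' setting.

    `database` is the database name from the data source settings,
    for example "~/db". In-memory databases get the "mem:" prefix.
    """
    path = f"mem:{database}" if in_memory else database
    return f"jdbc:h2:tcp://{host}:{data_source_port}/{path}"


# Data source port 9090 matches the console port 9091 used in the example.
console = h2_console_url(9090)
file_url = h2_jdbc_url(9090, "~/db")
memory_url = h2_jdbc_url(9090, "db", in_memory=True)
print(console, file_url, memory_url)
```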

Performance tuning for MySQL databases

This topic references the InnoDB version 8 implementation of MySQL. Most important: always use the latest version of MySQL. Versions before 8 are much slower.

- Some simple rules for the design phase in EntityDSL

- Always make an effort to hit an index with your where condition. The index should narrow the result down to a reasonable number of matching entries (<100).

- Avoid calculations in your where condition as they are calculated for every row that must be selected (e.g. where a+b > 5).

- Do not fan out all possible combinations of indexes. Make one precise index that matches most of the time.

- Avoid repeating index segments, as in

- index 1 a

- index 2 a, b

- index 3 a, b, c

- etc.

- MySQL may fail to pick the best one. Create only index 3, even if "c" is not part of your condition.
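The last rule relies on the fact that a composite index also serves conditions on its leading columns. The sketch below demonstrates this with SQLite, used here only as a stand-in because it ships with Python; it is not MySQL, and the table and index names are hypothetical. In MySQL you would inspect the plan with `EXPLAIN` instead.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE t (a INTEGER, b INTEGER, c INTEGER, payload TEXT)")
# Only the widest index is created (index 3 in the list above).
con.execute("CREATE INDEX idx_abc ON t (a, b, c)")


def plan(sql):
    """Return the query plan text SQLite reports for a statement."""
    rows = con.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The last column of each plan row holds the human-readable detail.
    return " ".join(row[-1] for row in rows)


# Neither condition mentions "c", yet both can use the (a, b, c) index.
plan_a = plan("SELECT payload FROM t WHERE a = 1")
plan_ab = plan("SELECT payload FROM t WHERE a = 1 AND b = 2")
print(plan_a)
print(plan_ab)
```

Both plans name `idx_abc`, so the separate indexes on `a` and on `a, b` would add write overhead without improving these lookups.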

- Datainterchange performance issues

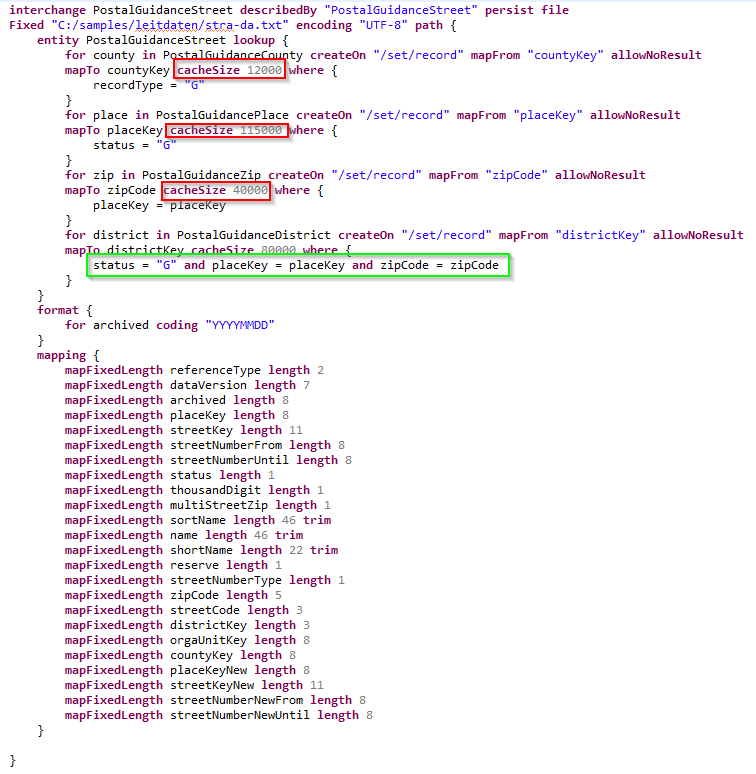

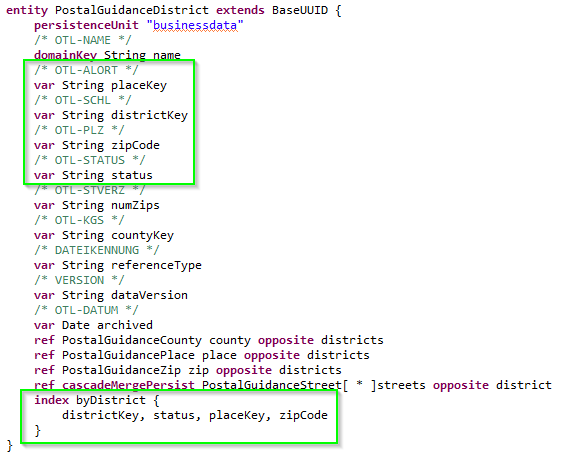

If you make heavy use of the DatainterchangeDSL and your models use lookups to connect to other entities, be sure to use the so called second level cache. Here is an example extracted from the German DHL cargo address validation data:

As county, place and zip are selected for every row to be imported, it is useful to define a second-level cache of an appropriate size to hold all entries. Do not oversize the cache, as this could result in a garbage collector (GC) exception caused by an out-of-memory condition. A smaller cache is better than no cache or an exception during import.

The lookup to find the right district uses 4 values from the imported row. The best approach is to have all the requested fields in the index. For better performance and fewer problems while importing, it is good to allow duplicate keys here. External data sources are often not as unique as they should be.

The method described above converts the given domain keys of the imported streets into surrogate key references via UUIDs.
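The effect of a bounded cache on repeated lookups can be sketched in a few lines of Python. This is not the JPA second-level cache itself, only an illustration of the idea; the lookup table, the sample keys and the function name `lookup_place_id` are made up for the example.

```python
from functools import lru_cache

# Hypothetical lookup table standing in for the database (sample values).
PLACES = {("DE", "69115"): "uuid-place-1", ("DE", "10115"): "uuid-place-2"}
db_hits = 0


@lru_cache(maxsize=1024)  # bounded: size it to the data, do not oversize
def lookup_place_id(country, zip_code):
    """Resolve a domain key to a surrogate key, counting database hits."""
    global db_hits
    db_hits += 1
    return PLACES.get((country, zip_code))


# Four imported rows, but only two distinct lookup keys.
rows = [("DE", "69115"), ("DE", "69115"), ("DE", "10115"), ("DE", "69115")]
ids = [lookup_place_id(*row) for row in rows]
print(db_hits)
```

Only distinct keys reach the simulated database; every repeated key is answered from the cache, which is what makes the cache worthwhile when the same lookup occurs for many imported rows.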

- MySQL settings

The MySQL server comes with a settings file in the hidden Windows directory ProgramData. For standard installations you will find a file called my.ini under C:\ProgramData\MySQL\MySQL Server 8.0. Here are changes that boost performance:

- Although it is not recommended by the comment above this setting, you should set

- innodb_flush_log_at_trx_commit=0

- If you can afford it, increase the buffer pool size. Set

- innodb_buffer_pool_size=1G

To make the changed settings effective, you must restart the MySQL80 service.

Modeling

Display of article description

Question:

I would like to show the name of the article next to the article number in a dialog (the field is not editable). The dialog grammar offers few meaningful ways to specify fields. "autowire" and "view" probably do not help here, and the manual does not help me any further.

One more example to make it clearer: a GTIN is scanned; the GTIN appears in the GTIN field, the article description in the Label field, and the unit of measure in the Unit of measure field.

Answer:

I assume you mean the descriptor of a many-to-one relation in a combo box. For the time being, the entries in the combo box are identical to the selected one and therefore do not show more information than the list does. If the domainKey or domainDescription keyword decorates an attribute of the owner entity of the many-to-one relationship, that attribute is the value displayed in the relationship combo box. What matters for linking or unlinking a relationship is the underlying related datatype, not the displayed value, so you can also use a synthetic attribute as the domainKey attribute. You could create a new attribute in the owner entity that combines number and name, declare it invisible in the authorization DSL, and have it filled automatically with a def statement in the entity model.

Autobinding offers no solution for this right now. In the UI DSL you can add bindings as desired. A possible solution is the following: we introduce a modifier (metaflag) to mark an entity attribute that is unexpressive without additional information (such as a GTIN, item number or SKU). If the autobinding mechanism detects such an attribute, it automatically adds the domainKey and/or domainDescription to the right of the unexpressive attribute. If the marked attribute itself is either a domainKey or a domainDescription, the respective missing part is added.

Please try the following solution for the number + description problem: on the "one" side of a many-to-one relationship, create a domainKey attribute in which you virtually combine 2 or more attributes, named "productSearch" for example. Then create a method with the "def" keyword, preceded by the annotation "@PostLoad". The effect is that whenever the domainKey is loaded, the system calls the annotated method and assigns whatever you put inside the method to this domainKey attribute. Remember that attributes marked as domainKey or domainDescription are shown in combo boxes as descriptors for the respective underlying DTO. Take an entity definition like this:

entity ProductClass {

persistenceUnit "businessdata"

uuid String ^id

domainKey String productSearch

var String productSubcategory

var String productCategory

@PostLoad def void fillProductSearch() {

productSearch = productCategory + " - " + productSubcategory

}

}

This leads to combo box entries that combine category and subcategory.

Connecting different database products

You can easily use different database products as long as they are supported by JPA and you have the appropriate driver at hand. For every product you must have a separate JNDI definition in your product preferences, and you must define a separate persistence unit per JNDI data source. It is therefore not possible to share relationships between different database products, as JPA does not allow navigation across persistence unit boundaries. The only way to support such projects is to use an application server like WebLogic from Oracle or WebSphere from IBM, which is quite expensive for small installations.

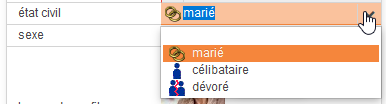

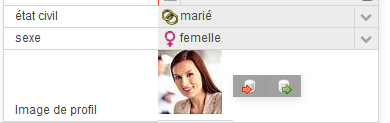

Default Localization

Question:

The Default Localization should be German - how is it adjustable?

Answer:

Any application built with OS.bee first reads the localization properties of the browser the client is running on. This is the default locale before a user logs in. Every user has their own user account, which is maintained by the admin or by the user via the user menu -> profile. A user's preferred locale can be set up in that dialog. After signing in, the locale of the client is switched to the given one.

CSVtoApp ... Column limitation?

Question:

A CC article pool (article.bag) with all columns of the parameter table can be imported. All columns with content are displayed in Eclipse - but the Create App button does not work. Only when many columns (here from letter b) have been deleted does the button work and the entity is created. Is there a limit? And could the program give a meaningful message if it does not work?

Answer:

There is no known limit on the number of columns that can be imported. However, column names must not collide with reserved keywords of Java or of models such as entity, datamart or datainterchange. Avoid names like new, entity, column, attribute and other reserved keywords. AppUpIn5 (formerly known as CSV2APP) crashes without notice if you violate this rule, and the crash cannot be avoided because the problem lies in the underlying Xtext framework.
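Because the tool gives no message, it can help to check the header line of the CSV file yourself before importing it. The Python sketch below does that; the function name `colliding_columns` is hypothetical, and the keyword set is deliberately incomplete (a few Java keywords plus the names mentioned above), so extend it for the DSLs you use.

```python
# Illustrative, incomplete set: a few Java keywords plus the names the
# answer above mentions. This is an assumption, not the tool's own list.
RESERVED = {
    "new", "class", "int", "import", "package",
    "entity", "column", "attribute",
}


def colliding_columns(header_line, delimiter=";"):
    """Return the column names that collide with a reserved keyword.

    The comparison ignores case to stay on the safe side.
    """
    names = [name.strip() for name in header_line.split(delimiter)]
    return [name for name in names if name.lower() in RESERVED]


suspects = colliding_columns("id;new;Entity;price")
print(suspects)
```

Renaming the reported columns in the CSV file before pressing Create App avoids the silent crash described above.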

Entering a number without keypad

Question:

I have a field for entering a number as e.g. Counted quantity. This quantity is not to be entered with the number keypad, but via a combo-box. How can I define this field so that the numbers 1 to 1000 are selectable?

Answer:

A strange use case indeed. Why force a user to select from a combo box with 1000 entries? You could validate the user's input more comfortably with a validation expression in the Datatype or Entity DSL, using this kind of syntax:

- in Datatype DSL:

datatype one2thousand jvmType java.lang.Integer asPrimitive minNumber(01) maxNumber(1000)

- in Entity DSL:

var int [minNumber(01) maxNumber(1000)] unitsPerCase

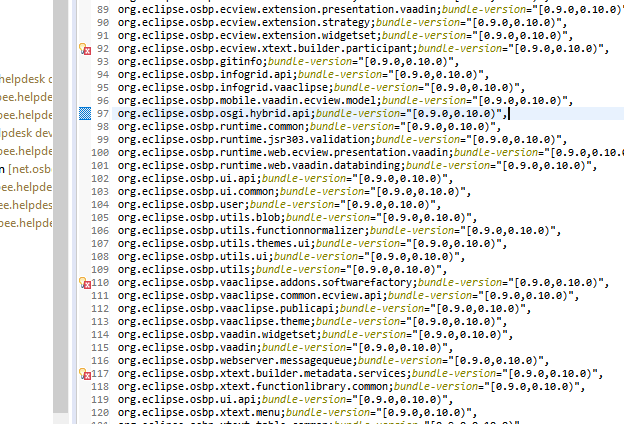

Missing bundles after update and how to solve it

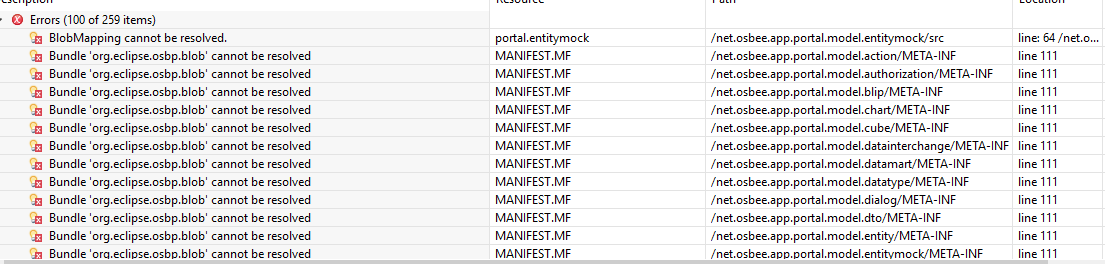

Sometimes, some bundles seem to be missing in the installation after an update has been made. This might look like the following screenshot:

The solution is to check the target definition of the workspace and to update the target with the software from the same repository and the same date as the installation.

Creating CSV files as input for AppUpIn5Minutes with OS.bee

Question:

- Do you have data in a persistence layer, such as a database, that you want to bring into the OS.bee system?

- Do you want to use OS.bee as the tool for it?

Answer:

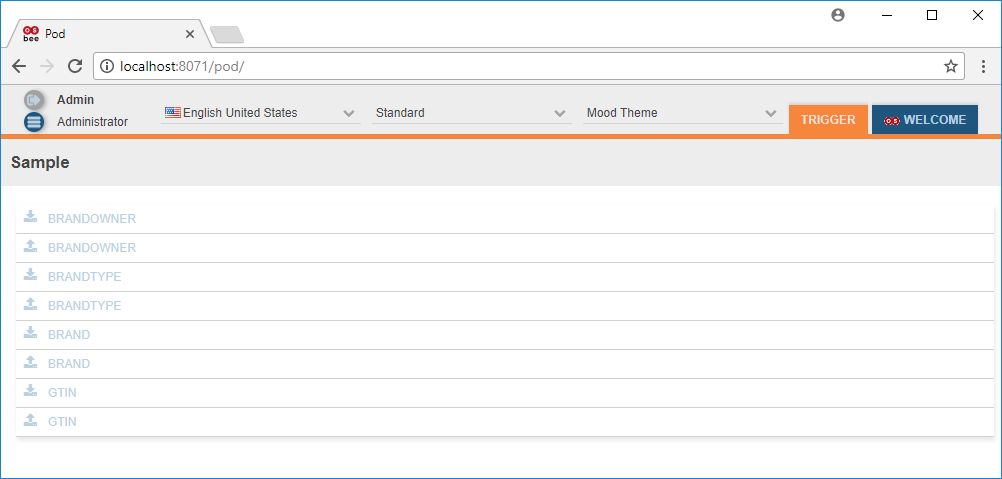

We demonstrate this task by bringing POD data into our OS.bee system. Several steps are required.

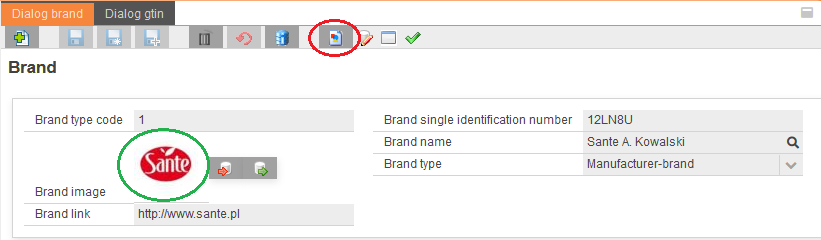

The POD data consists of many entities, but we focus only on the entities Brand, Brandowner, Brandtype, Gtin and Pkgtype.

The result of this task is a set of CSV files that serve as input for the AppUpIn5Minutes tutorial.

First step: data import

- In our case the data is available as an SQL file, so we first have to create a persistence layer (here a MySQL database) and load the data into it. If a database with the data already exists, this first step is unnecessary.

1. The first step is to get the original data and to put it into a persistence layer

- The POD data is provided via the SQL file

- We use MySQL as the persistence layer and run this SQL file against the schema pod, which was created for this purpose.

- Now all the corresponding tables are created and filled with the data in our MySQL server.

2. The next step is to prepare the OS.bee application so that it can read the data from the persistence layer

- After this file has been run on a MySQL database, none of the entities has a technical key. JPA, on which our database communication is based, requires one, so an ID has to be added.

- So for each table created in the first step, an entity has to be defined manually within an EntityDSL instance.

- For Brand, for example, it looks like this:

entity Brand {

persistenceUnit "businessdata"

var int brandtypecd

var String brandtype

var String brandnm

var String brandowner

var String bsin

uuid String id

var String brandlink

}

- As a result, a new but empty column ID is added to the MySQL table 'brand' once the OS.bee application has been started and a database call for the entity has been made.

- For the existing relations between the entities under consideration, the corresponding foreign key columns also have to be created manually. In our example the existing relations are from Brand to Brandowner and Brandtype, and from Gtin to Brand and Packagetype.

- The foreign key columns within the respective entity definitions have to look like this:

entity Brand {

...

var String brandTypeId

var String brandOwnerId

}

entity Gtin {

...

var String brandId

var String packageTypeId

}

- The easiest way to make a first call is to create a trigger view of all entities to export their data via datainterchange, and to start an export as explained in the following steps.

- As a result, new but empty columns are added once the OS.bee application has been started and a database call for the entity has been made:

- the columns PACKAGE_TYPE_ID and BRAND_ID in the MySQL table gtin

- the columns BRAND_OWNER_ID and BRAND_TYPE_ID in the MySQL table brand.

Second step: UI requisites

3. Create a trigger view to export the data via datainterchange

- The last step exports the structure and content of all the entities into CSV files. Each of these CSV files requires one datainterchange definition in a DatainterchangeDSL instance, so create an entry like this for each of them:

interchange Brand merge file

CSV "C:/osbee/POD/POD_en/Brand.csv" delimiter ";" quoteCharacter """ skipLines 1 beans {

entity Brand

}

- To make these options visible in the OS.bee application, a perspective within a menu is required.

- So we create a trigger view providing all the datainterchange definitions like this:

perspective Trigger {

sashContainer sash {

part pod view dataInterchange datainterchanges

}

}

- We then put this perspective into a menu like this:

entry Menu {

entry Item {

entry POD perspective Trigger

}

}

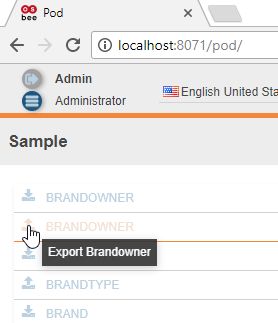

- The resulting view looks like this. An export action in this view triggers a database call and thereby changes the tables on the MySQL server:

Third step: Data enhancements

4. The following step is to fill the empty UUID columns with data

- To work properly with JPA and to use relations, we decided to use UUIDs. So the first step is to fill the empty column ID with UUIDs generated by the MySQL database, using the following command:

UPDATE YourTable set guid_column = (SELECT UUID());

- In case of our example brand, it will be:

UPDATE pod.brand SET ID = (SELECT UUID());

- After that, the corresponding relations have to be transformed into UUID foreign keys. To do so, the existing weak relations are used to create strong foreign key references. As a first step we fill the foreign key columns of the table gtin.

- The existing relation between Brand and Gtin is based on the attribute Bsin. So the corresponding foreign key column BRAND_ID has to be filled like this:

DELETE FROM pod.gtin WHERE bsin IS NULL; UPDATE pod.gtin g SET brand_id = (SELECT id FROM pod.brand b WHERE g.BSIN = b.BSIN);

- And for the corresponding foreign key column PACKAGE_TYPE_ID like this:

UPDATE pod.gtin g SET package_type_id = (SELECT id FROM pod.pkg_type t WHERE g.PKG_TYPE_CD IS NOT NULL AND g.PKG_TYPE_CD = t.pkg_type_cd);

- The next relation, between Brand and Brandtype, is based on the attribute brandTypeCd. So the corresponding foreign key column BRAND_TYPE_ID has to be filled like this:

UPDATE pod.brand b SET brand_type_id = (SELECT id FROM pod.brand_type bt WHERE b.BRAND_TYPE_CD IS NOT NULL AND b.BRAND_TYPE_CD = bt.BRAND_TYPE_CD);

- Finally, as the relation between Brand and Brandowner is defined via a helper table brand_owner_bsin, the corresponding foreign key column BRAND_OWNER_ID has to be filled like this:

UPDATE pod.brand b SET brand_owner_id=(SELECT id FROM pod.brand_owner bo WHERE bo.OWNER_CD IS NOT NULL AND bo.OWNER_CD=(SELECT owner_cd FROM pod.brand_owner_bsin bob WHERE b.BSIN = bob.BSIN));
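The logic of the SQL statements for Brand and Gtin can be traced on a few in-memory rows. The Python sketch below is only an illustration: the sample rows are made up, and `uuid.uuid4()` stands in for MySQL's `UUID()` function. It fills the ID column, drops rows without a BSIN and then resolves the weak BSIN relation into a UUID foreign key.

```python
import uuid

# Made-up sample rows; the real data lives in the pod schema.
brands = [{"bsin": "B1", "id": None}, {"bsin": "B2", "id": None}]
gtins = [
    {"gtin": "0001", "bsin": "B1", "brand_id": None},
    {"gtin": "0002", "bsin": None, "brand_id": None},
    {"gtin": "0003", "bsin": "B2", "brand_id": None},
]

# 1. Fill the empty ID column with generated UUIDs.
for brand in brands:
    brand["id"] = str(uuid.uuid4())

# 2. Drop rows without the natural key, as the DELETE statement does.
gtins = [g for g in gtins if g["bsin"] is not None]

# 3. Resolve the weak relation (BSIN) into a UUID foreign key.
id_by_bsin = {b["bsin"]: b["id"] for b in brands}
for g in gtins:
    g["brand_id"] = id_by_bsin.get(g["bsin"])

print(gtins)
```

The same pattern applies to the other foreign key columns; only the natural key used for the match changes.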

Forth step: Export into CSV files

5. The final step is to export all the actual entity structure and their content into CSV files

- Now all the datainterchange entries in the trigger view have to be used to export the corresponding entity structure and their content into the corresponding CSV files.

- Simply push the export button as shown for Brandowner as follows:

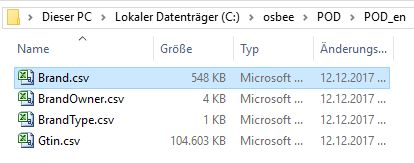

- The corresponding CSV files output as shown here:

import declaration used in most DSL

Question:

Is there an easy way to handle the needed import declarations? Do we have to begin with the import declarations when creating a new model, or can we start with other main semantic elements of the DSL?

Answer:

Yes - there is an easy way to create the import declarations. You don't have to begin with the declarations. You can use SHIFT-CTRL-O to update the import declarations at any time in a model instance or simply see them showing up during entering the model code. Just start writing your model code, use the built-in lookup functionality with CTRL-<SPACE> to find the available keywords or referable objects and get the imports added during typing. To check if everything is OK use SHIFT-CTRL-O to update the import statements.

Entity DSL (DomainKey DomainDescription)

Question:

What is the effect of using domainKey and domainDescription inside the application? The Documentation shows only the syntax.

Answer:

domainKey and domainDescription mark the attribute that serves as the key or the description of a domain object. Primary keys are always a UUID or an integer ID and mean nothing to a human, so these two keywords identify an attribute that does. Technically, either the domainKey or the domainDescription leads to a suggestTextField in a dialog rendered via autobinding. A suggestTextField lets the user type some letters and pops up suggestions to select from. Whenever a reference to an entity with a domainKey or domainDescription is rendered with a comboBox, the classified attribute is used to identify the relationship to the user. If no domain classification is given, the relationship cannot be linked via a comboBox, as the system doesn't know which attribute to present to the user. This fact can be used intentionally whenever a relationship is not meant to be changed or seen by a user.

assignment user -> position

Question:

We defined an organisational structure using the DSL organization. While maintaining a user (dialog), the defined positions are not shown in the drop-down list. A dialog based on a predefined DTO (org.eclipse.osbp.authentication.account.dtos.UserAccountDto) is used. Is there anything to consider?

organization portal Title "Organigramm Portal" {

position Administrator alias "Administrator_1" {

role AdminIT

}

position projectleadinternal alias "Project Lead Internal" superiorPos Administrator {

}

position projectleadexternal alias "Project Lead External" superiorPos Administrator {

}

position projectmemberexternal alias "Project Member External" superiorPos projectleadexternal {

}

}

Answer:

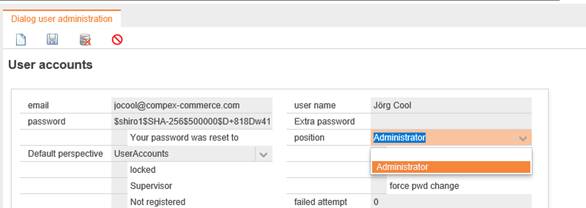

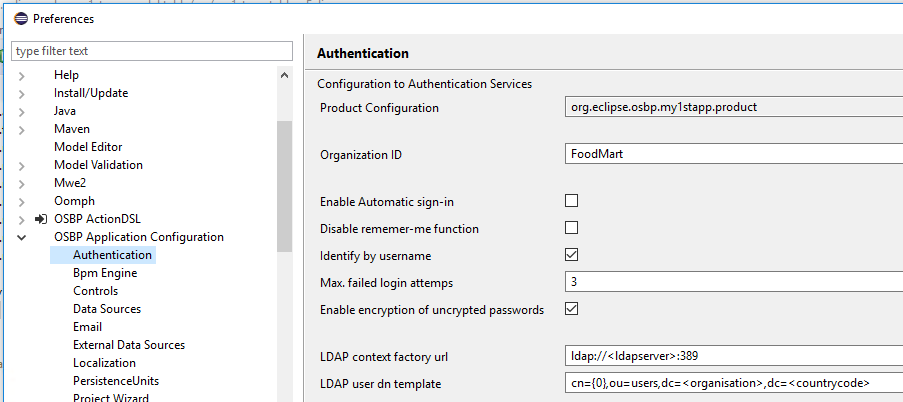

The combo box only shows positions from the Organization DSL if the "Organization and Authorization" component is licensed. If this is not the case, the default role Administrator is shown, as every user has administrator rights without this component. This could happen if you installed OSBP instead of OS.bee. Otherwise, the name of the organization has to be entered in the Eclipse Preferences --> OSBP Application Configuration --> Authentication, in the field Organization ID.

I18N.properties (Reorganization of obsoleted values)

Question:

Each DSL has its own I18N.properties to translate the values. The property file includes values that are no longer used in the model description (for example, after a spelling correction).

The update of the properties seems to be one-way. Is there a function to reorganize the values (with the target to drop all obsoleted values)?

Answer:

No. For safety reasons there is no function that deletes unused entries: translations into foreign languages are expensive and should not be lost by accident. The modeler is responsible for deleting obsolete entries.

Display values from ENUMS in Table

Question:

We are using a perspective with the combination of table and dialog to maintain master data. The dialog contains combo-boxes to select values based on ENUMS. The selected value is displayed in the dialog, but is not displayed in the table. Is there a way to display the values of the selected ENUM in the table, with the objective to use the filter?

Answer:

This is not yet implemented but on schedule.

References to images

Question:

Using for example MENU.DSL Documentation:

package <package name>[{

expandedImage <expandedImage String>

The keywords expandedImage and collapsedImage are used to define the menu image when the menu is expanded or collapsed. Where can we find the valid names of the images to use in the String?

Answer:

The images can be selected by pressing <CTRL>+<SPACE> right behind the keyword. This will lead you to the image picker. Double-click on "Select icon...", then select the image from the image pool. The corresponding name will be added to the model. If you want to add your own images to the pool, follow How to add pictures.

Perspective with master/slave relationship

Question:

We created a perspective with a "master" table and 5 related "slave" tables. The target is to select one row in the master table and automatically filter the data rows in the slave tables related to the selected data. Where can we find information about the way to realize this goal?

Answer:

By "master" you probably mean an entity that has a one2many relationship to the "slave" entity. Views of the underlying tables synchronize if they share a filter with the selection of another table. The filter must use the same entity as the table in which a row is selected. So the datamart of every "slave" must have a condition in a many2one join where the "one" side is the entity that should trigger synchronization when rows are selected. In effect, the selected row tries to set all filters on the same entity to the same ID. This applies to all open views in all open perspectives. In the datamart DSL, the keyword "filtered" produces a combo box in the header of the table; the value selected there is used as a filter by all tables of the perspective that use the same condition. The keyword "selected" produces a list box instead.

Perspective use border to show boundary for each sash

Question:

Is it possible to show a border between the sash containers used in a perspective? From our point of view the arrangement is not very clear to the user. It would be helpful to (optionally) show a visible border between sash containers and even between parts. For example:

- green border between parts of a sash-container

- blue border between sash-container

Is this already possible?

Answer:

For the moment there are no plans to colorize borders by means of grammar keywords. It is possible by changing the CSS for the currently selected theme.

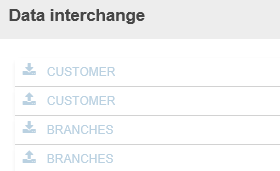

Datainterchange Export (does not overwrite existing file)

Question:

We defined an interchange for a specific entity. The created TriggerView is called in a perspective, which is called in the menu. We also used an adjusted dialog with a toolbar with the export command. When the defined target filesystem is empty, the export file was created. A second try to export the data does not create a new file. Is there something to consider to allow an "overwrite" of existing data?

Answer:

Datainterchange tries not to overwrite already exported files and appends a running number to the end of the filename. If this does not work, you have to debug. The action buttons of the generated TriggerView are accidentally rendered as if they were disabled, although they are not. This will be changed in the future.

If you find them too pale (hard to read), it is also possible to define the layout of the buttons.
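The renaming behaviour mentioned in the answer above explains why a second export seems to produce no file: the file is written under a different name. The following Java sketch only illustrates the idea. It is not the Datainterchange implementation; the class `ExportFileNames`, the method `nextFreeName` and the exact naming scheme (number appended directly to the name) are assumptions.

```java
import java.util.Set;

/** Illustrative helper, not part of OS.bee: finds an export file name that is still free. */
final class ExportFileNames {

    private ExportFileNames() {
    }

    /**
     * Returns the desired name if it is unused; otherwise appends the first
     * running number (1, 2, 3, ...) that yields an unused name.
     * The naming scheme is an assumption made for this sketch.
     */
    static String nextFreeName(String desiredName, Set<String> existingNames) {
        if (!existingNames.contains(desiredName)) {
            return desiredName;
        }
        int runningNumber = 1;
        while (existingNames.contains(desiredName + runningNumber)) {
            runningNumber++;
        }
        return desiredName + runningNumber;
    }
}
```

So when checking whether an export worked, look for additional files next to the first one rather than for a changed timestamp on the original file.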

Logged in user as part of the data-record

Question:

We want to create an entity in which every data record references the logged-in user who inserted it. Is there a way (a function or something else) to handle this request?

Answer:

This request is already a ticket.

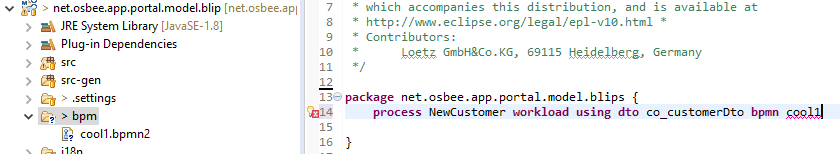

bpmn2 file reference in blip-DSL

Question:

There is no hint about where to save the *.bpmn2 files. So we created a subfolder named "bpm" in net.osbee.app.XXX.model.blip. In the new subfolder we created a new jBPM Process Diagram. Following the description shown during creation, we removed "defaultPackage." as the prefix of the process ID. The model is simple (Start-Event / User-Task / End-Event). When we try to reference the bpmn2 model inside the blip DSL, the name cannot be resolved. Is there something we need to take care of?

Answer:

You must right-click the new folder "bpm" and, under "Build Path", select "Use as Source Folder". After this is done, press CTRL+SHIFT+O in the Blip DSL to "organize imports".

How to add the missing icon images to a new created ENUM combo box

Question:

Defining an ENUM at the entity DSL model instance, a corresponding combo box is created, but the corresponding icon image for each ENUM component is missing.

Answer:

For the solution, have a look at How to add pictures. We would like to enforce the usage of icons in OS.bee. So if you use an EnumComboBox without images, a "missing icon" icon appears in the combo box; it was introduced intentionally to remind the designer that an icon is missing. There is still a problem: if, for example, there are 10 enum values per enum type and 10 enum types are used in an entity, 100 image names must be generated and deposited, even if they all use the same picture. This takes a lot of effort and time just for writing the file names per picture. Some designers want to use the EnumComboBox without images. Although great user experience comes from great effort, for designers who want to hold on to the slipshod way we will introduce a setting in the OS.bee preferences that suppresses icons entirely when at least one entry of a combo box has no icon. For a pretty user experience, we must decide between text and icon per combo box.

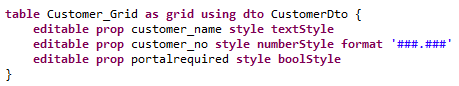

table DSL (as grid) create new data records

Question:

Is it possible to create new data records while using a grid? Existing data records can be changed for defined attributes (editable prop) with a double-click on the data row. What must be changed to allow the creation of data rows?

Answer:

This is not yet possible, but on schedule.

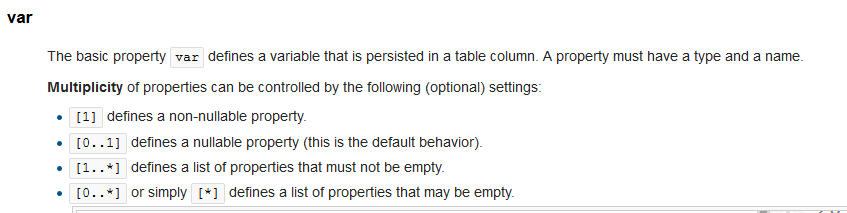

DSL entity (Multiplicity of properties)

Question:

We defined an entity for customer data, with the goal that some attributes must be entered when new data records are created. Setting only the multiplicity on the property seems not to be enough to prevent a data record from being saved with non-nullable attributes left empty. What has to be done to reach the goal?

Code-example:

var int [1]customer_no

var String [1] customer_name

var boolean portalrequired

var String country

Snippet of documentation

Answer:

This is a very interesting question, so I will explain some important facts about binding and validation (formerly known as Plausi). As OS.bee implements the Model-View-Controller pattern, UI fields, business logic and data layer are strictly separated. The link between them is realized by a "bean binding" mechanism. This mechanism propagates changes between the MVC elements in every desired direction. Within this mechanism there is a layer called bean validation. For every direction of binding, a validation can be implemented to prevent or allow a certain change. Even conversions between values from model to presentation and vice versa are implemented in the binding mechanism. There are some predefined validation rules available in the Datatype DSL. As we follow the domain concept of defining things as close to the base of the DSL hierarchy as possible, this is the place to go for validations.

For your request, you must define a new datatype as follows:

datatype StringNotNull jvmType java.lang.String isNotNull[severity=error]

You can apply a validation rule to every "non-primitive" jvmType (JVM = Java Virtual Machine) such as Double or Integer, but not to primitive types such as double or int. Validation rules are cumulative. The severity of the user response on violation of a rule can be defined in 3 flavors:

- info

- warn

- error

Each of them can be styled via CSS. The following validation rules are available right now:

- isNotNull

- isNull

- minMaxSize (applies to Strings)

- maxDecimal and minDecimal (applies to Double, Long and Integer - Float is not supported by OS.bee)

- regex (regular expression must be matched - for the advanced designer)

- isPast (applies to date)

- isFuture (applies to date)

► Examples:

datatype DoubleRange jvmType java.lang.Double maxDecimal (20.00) minDecimal (10.00)

datatype String5to8Chars jvmType java.lang.String minMaxSize(5,8)

As a last step you must use the newly created datatype in your entity to let the validation work. There is one additional validation accessible as keyword from the Entity DSL. If you want to enforce the uniqueness of an attribute value like "fullName" you can use the unique keyword in the entity.

► Example:

entity Employee extends BaseID {

persistenceUnit "businessdata"

domainKey unique String fullName

When you are about to save a newly created entry, the database is accessed during bean validation to verify that the given attribute content is not already in the database. Otherwise, a validation error with severity error appears in the front-end.

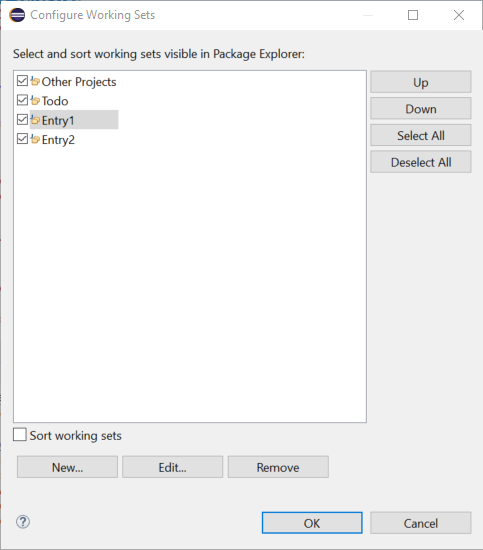

UI-Design

Question:

Is it possible to design a combo box or list box in which I can sort the entries myself? Like in Eclipse > Configure Working Sets..., where I am able to select Up / Down to sort the working sets.

At the moment I realize this in the entity with the field listPrio:

entity Title extends BaseUUID {

persistenceUnit "businessdata"

domainKey String title

var int listPrio

}

Then I sort the entries by giving a value for listPrio. But this is not a good solution.

Answer:

Sorry, not at this time. It sounds useful, so I will create a ticket for it.

DSL Dialog: Autosuggestion for DomainKey or DomainDescription

Question:

I develop an address management. In the Entity DSL, I have the fields:

domainKey String description

var String firstname

var String lastname

If I create a user:

firstname: Hans

lastname: Maier

Is it possible to have the value Hans-Maier suggested automatically for the field description? Something like derived attributes or operations in the Entity DSL, but only as a suggestion.

Answer:

Yes. After the definition of all attributes, you can define methods that are called by JPA through annotations.

domainKey String description

...

@PostLoad

def void makeFullName() {

description = firstname+"-"+lastname

}

Remember that description is completely transient. You could also use @PrePersist or @PreUpdate.

DSL Table

Question:

I have a table with 100 address records.

Is there a possibility of multi-selection?

► Example of selection of 5 addresses:

- I want to delete in one step 5 addresses.

- I want to send to these 5 addresses the same email.

Answer:

Not for the OSBP version. If you have the BPM option, the token for BPM can be built using a selectable table. The resulting workload could then send emails or issue deletes against the database in the following system task.

datamart DSL (condition filtered) table does not refresh

Question:

In a perspective we use organigram / table (user) / dialog (user). The table is based on a datamart using the semantic element conditions. Not all positions in the organization have an assigned user. When a position with an assigned user is selected, the data rows of the table are filtered. When a position without an assigned user is selected, the table does not refresh. What can we change in the model to refresh the table even when there is no corresponding data?

Answer:

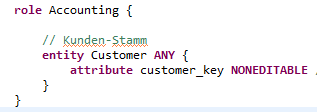

DSL authorization (CRUD)

Question:

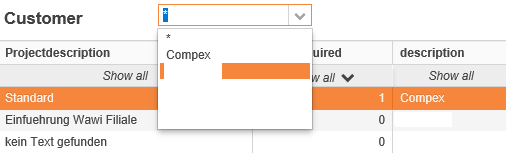

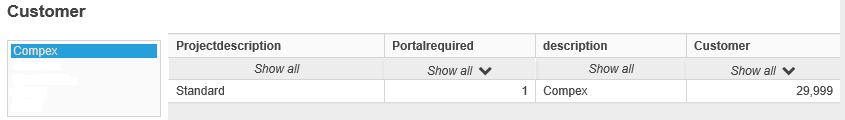

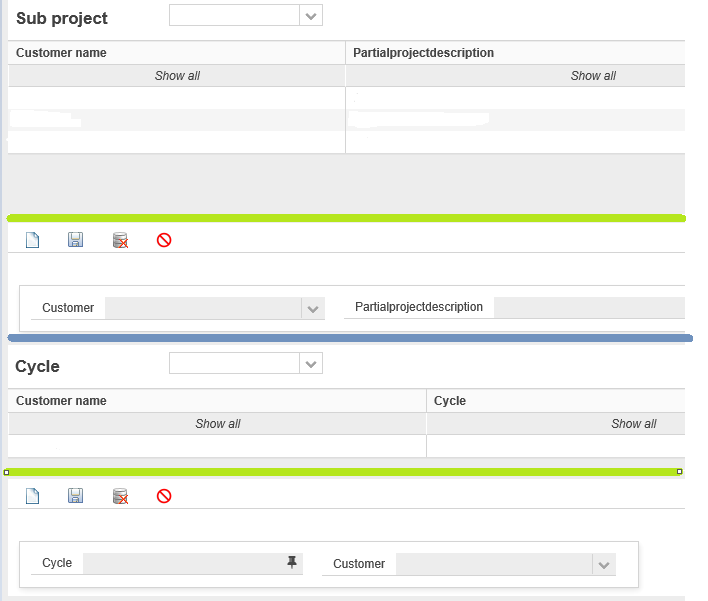

We tried to authorize the CRUD operations on a given entity "Customer" for some positions in different ways.

Assumptions:

- For each position there is a user available

- Each position has an assigned role (organization)

- Both users use the same perspective (table/dialog)

- The dialog uses a general toolbar with the necessary actions

Steps:

- Log in with the user assigned to position Accounting, create a new data row (new Customer) and save the data (o.k.)

- Log in with the user assigned to position ProjectIntern, select the new Customer in the table and press the delete button (the row is deleted, which was not expected)

Is there something we neglected to take care of?

Answer:

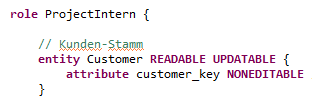

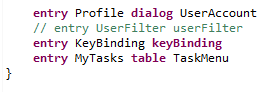

DSL Menu(UserFilter/SuperUser)

Question:

When a user marked as SuperUser logs in to our application and the menu model contains a definition for UserFilter, the menu is no longer shown inside the application.

The generated Menu in the application looks like this:

If the UserFilter line is included and the application restarted, after login, the application looks like this: (User,Position, and Menu are not visible)

The entities UserAccount and UserAccountFilter have been considered in the authorization DSL, with the value ANY assigned to the role of the user with the attribute SuperUser.

Answer:

Printer Selection (Default Printer)

Question:

The combo box (client toolbar) for the print service shows all printers of the client operating system. The default printer of the operating system is not preselected in the application. Is it possible to define a print service for each user within the dialog UserAccount? Is it possible for an administrator to select the print service for another user without having all print services on his local operating system? Or is it possible to preselect the default print service inside the client?

Answer:

DSL Entity Enum with own images

Question:

We tried to create own images for Enum-Values following the document How to add Pictures hosted using URL:

► Example:

enum TypeDocument {

PDF, DOCX

}

entity Customer {

persistenceUnit "businessdata"

domainKey String customerKey

id int no

var boolean portalrequired

var String country

var TypeDocument documenttyp

}

Own Fragment Bundle

Question:

- There is no hint about image sizes, are there some restrictions?

- There is no preferred way to transfer the created images into the folder (We use Copy and Paste, are there other ways?)

We started the application from within Eclipse and created a new data record using a dialog, but the created images are not shown inside the combo box. Can you help us find out what went wrong or what is missing?

Answer:

How to synchronize views

Synchronizing tables with dialogs is quite usual for OS.bee. If they share a common entity and they are placed on the same perspective, this is done automatically.

You can now even synchronize tables with reports or tables with tables. They must have an identifier column in common. If so, they are synchronized without the need for a common datamart condition. The receiving view must have the keyword "selectById" on it. Every table emits selection events for all id columns in the datamart: not only the id value of the root entity, but also the id values of related entities that are displayed in the table are emitted when a row is selected.

If you have a datamart of a table with entities related like A->B->C, the selection event emits the values A.id, B.id and C.id of the selected row. If there is another view on the perspective which uses A, B, or C as root entity, it will be synchronized automatically.

Two prerequisites must be met for the receiving view to synchronize automatically:

- the relation of the source view that should work as synchronizing element for the target view must appear as opposite relation in the datamart of the target view.

- the target view's datamart must have at least one normal attribute to show in the associated view.
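The id-based synchronization described in this section can be pictured with a small Java model. This is only a sketch of the idea, not OS.bee code: the class `SelectionSync`, its `View` type and the `publish` method are hypothetical, and the two prerequisites listed above (opposite relation, at least one normal attribute) are not modelled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Illustrative model of id-based view synchronization; all names are hypothetical. */
final class SelectionSync {

    /** A view on the perspective, reduced to what matters for synchronization. */
    static final class View {
        final String name;
        final String rootEntity;   // root entity of the view's datamart
        final boolean selectById;  // true if the view opted in with "selectById"
        Object currentId;          // id the view is currently synchronized to

        View(String name, String rootEntity, boolean selectById) {
            this.name = name;
            this.rootEntity = rootEntity;
            this.selectById = selectById;
        }
    }

    private SelectionSync() {
    }

    /**
     * Delivers the ids of one selected row (entity name -> id value) to all views.
     * A view reacts only if it opted in and its root entity is among the emitted ids.
     * Returns the names of the views that were synchronized.
     */
    static List<String> publish(Map<String, Object> selectedIds, List<View> views) {
        List<String> synchronizedViews = new ArrayList<>();
        for (View view : views) {
            if (view.selectById && selectedIds.containsKey(view.rootEntity)) {
                view.currentId = selectedIds.get(view.rootEntity);
                synchronizedViews.add(view.name);
            }
        }
        return synchronizedViews;
    }
}
```

For a table over A->B->C, a selected row would publish the ids of A, B and C at once; a view rooted at B that carries "selectById" picks up B's id, while views without the keyword or with an unrelated root entity stay as they are.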

How to get new features from your toolbar

As you probably know, ActionDSL creates commands, tool items and toolbars. There are some new features that you could use to enhance the usability of your dialog designs. Besides the classic buttons to save, delete, restore or create new entries, there are two new possibilities:

- save and new

- save as new

save and new is quite simple: after saving the dialog will enter the new-entry-mode again and re-uses the previously selected sub-type of the underlying DTO if there was one. The normal save will stay in editing-mode after save was processed.

save as new lets you make copies of the currently selected entry. If there are no uniqueness validations on the dialog, the copy is exactly the same as the previous entry, except for the internally used ID field. If you need to create a lot of new entries that are similar to an already existing entry, this button helps to reduce the time needed to enter them.
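What "save as new" does to the data can be sketched in a few lines of Java. The class `ProductEntry` and its fields are made up for this illustration and are not an OS.bee DTO; the use of a random UUID as the new internal ID is an assumption as well.

```java
import java.util.UUID;

/** Illustrative entry with an internal id; not an OS.bee DTO. */
final class ProductEntry {
    final String id;
    final String name;
    final String brand;

    ProductEntry(String id, String name, String brand) {
        this.id = id;
        this.name = name;
        this.brand = brand;
    }

    /** Mimics "save as new": same field values, fresh internal id. */
    ProductEntry copyAsNew() {
        return new ProductEntry(UUID.randomUUID().toString(), name, brand);
    }
}
```

If one of the copied fields were marked unique, the copy would have to be edited before saving, which is why the text above mentions uniqueness validations.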

How are these new buttons created?

- create 2 new commands in ActionDSL (you could use your own keybinding shortcut)

command saveAndNew describedBy "save and new" keyBinding "CTRL ALT A" dialogAction SaveAndNew

command saveAsNew describedBy "save as" keyBinding "CTRL ALT F" dialogAction SaveAsNew

- add the new commands to your toolbar which is used in your dialogs

toolbar Dialog describedBy "Toolbar for dialogs" items {

...

item saveAndNew command saveAndNew icon "dssaveandnew"

item saveAsNew command saveAsNew icon "dssaveasnew"

...

}

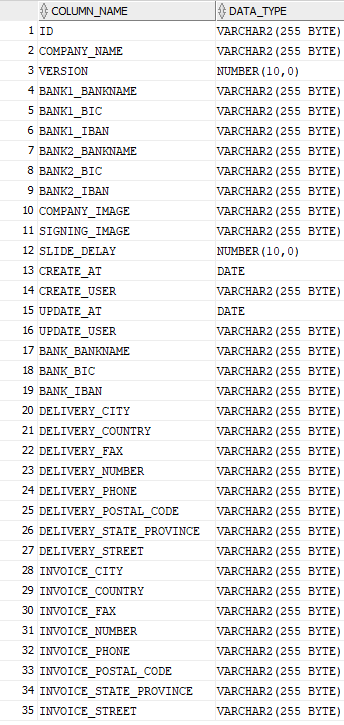

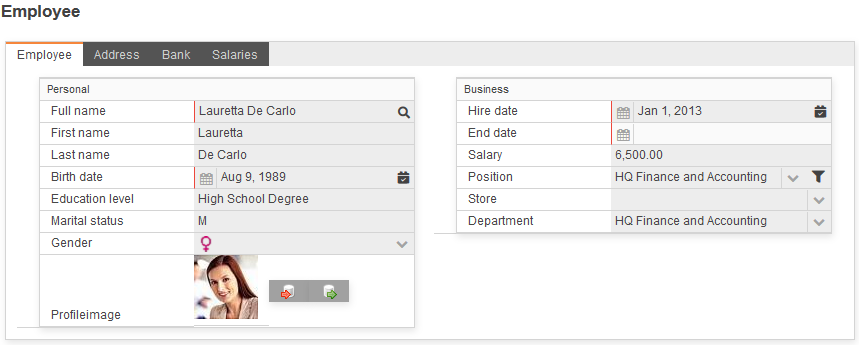

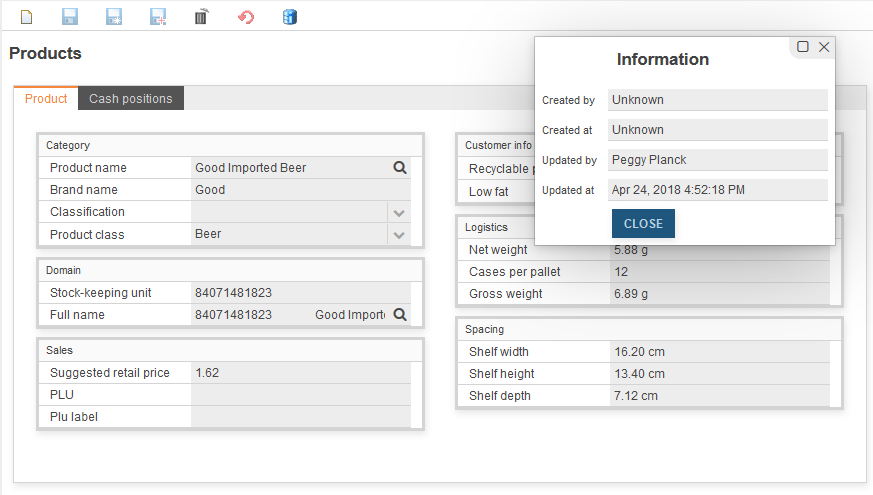

Add user information to your CRUD operations

Sometimes it is desired to have some information persisted concerning the user and the date when an entry was created or modified. This is how this is to be implemented: First you must add the necessary attributes to the entities where this information is needed. If you use a mapped superclass for all entities, it makes it very simple to add it to all entities at once. These attributes must be annotated with the appropriate tag so that the generators know what you want (supply metadata).

Here is an example if using mapped superclasses:

mappedSuperclass BaseUUID {

uuid String id

version int version

@CreateBy

var String createUser

@CreateAt

var Timestamp createAt

@UpdateBy

var String updateUser

@UpdateAt

var Timestamp updateAt

}

The same also works for dedicated entities. If the EntityDSL inferrer discovers one of the annotations @CreateBy, @CreateAt, @UpdateBy or @UpdateAt, it checks the following attribute definition, and if the datatype matches the annotation, the JPA mechanism enters the requested information into the new or modified record. So be careful with the datatype: the ...By annotations expect a String to follow, the ...At annotations a date type.
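The effect of the four annotations on a record can be pictured with the following Java sketch. It does not show the generated OS.bee or JPA code; `AuditedRecord` and `AuditStamper` are hypothetical names, and `java.time.Instant` stands in for the Timestamp type of the model.

```java
import java.time.Clock;
import java.time.Instant;

/** Illustrative record carrying the four audit attributes from the example above. */
final class AuditedRecord {
    String createUser;
    Instant createAt;
    String updateUser;
    Instant updateAt;
}

/** Fills the audit attributes; stands in for what the persistence layer does. */
final class AuditStamper {
    private final Clock clock;

    AuditStamper(Clock clock) {
        this.clock = clock;
    }

    /** Called once when the entry is first stored. */
    void onCreate(AuditedRecord entry, String userName) {
        entry.createUser = userName;
        entry.createAt = clock.instant();
    }

    /** Called on every later modification; the creation data stays untouched. */
    void onUpdate(AuditedRecord entry, String userName) {
        entry.updateUser = userName;
        entry.updateAt = clock.instant();
    }
}
```

The clock is passed in so that the behaviour can be checked with a fixed time; in the real application the current system time and the logged-in user would be used.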

- How to make it visible to the runtime-user?

You must supply a toolbar command in ActionDSL to enable the user to show the information:

command databaseInfo describedBy "database info" keyBinding "CTRL ALT I" userinterfaceAction Info

Then you must extend your dialog toolbar with the new command:

toolbar Dialog describedBy "Toolbar for dialogs" items {

...

item databaseInfo command databaseInfo icon "dbinfo"

}

You will end up with this toolbar button:

If the user clicks this button or uses its shortcut, a popup window appears showing the requested info. As the user selects different entries and leaves the popup open, its content will refresh according to the underlying information.

Designing dialogs using multiple columns

Generally there are two ways to define dialogs in OS.bee:

- using UI model manually

- using autobinding automatically

The advantage of using a UI model is that you can create sophisticated nested layouts and bind from more than one DTO, even if they don't have a relationship. The disadvantage is that you have to lay out and bind manually. If a DTO changes, you must also change the dependent UI models.

The advantage of using autobinding is that you have nearly nothing to do if your dialog exactly follows the DTO description. A mechanism collects all available metadata from the underlying entities/DTO and tries to render a suitable dialog. The disadvantage is that you can change neither the layout nor the look.

The exception is a new feature in DialogDSL: you can tell the dialog to render a multi-column layout. Just enter the keyword "numColumns" and a number in the dialog grammar.

dialog Products describedBy "Products" autobinding MproductDto toolbar Dialog numColumns 2

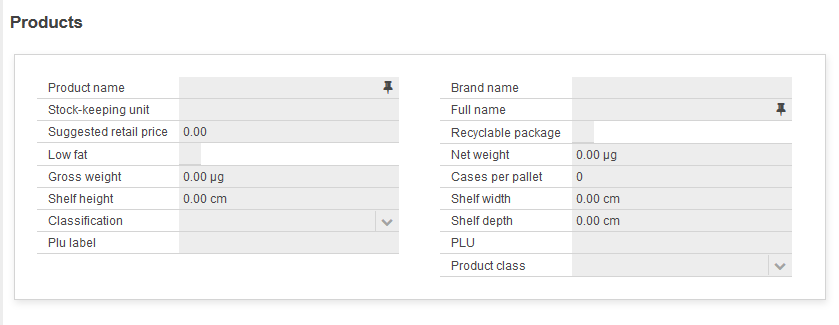

This will result in a 2-column layout like this:

This is how it looks with 3 columns:

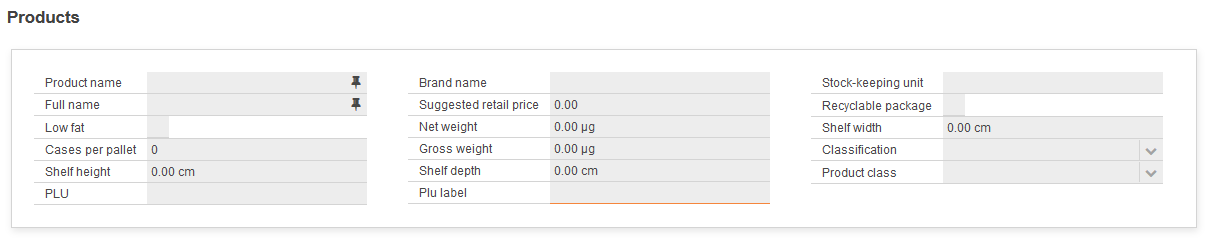

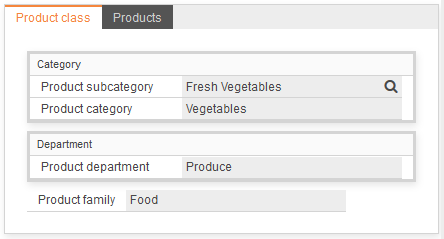

Grouping attributes on dialogs

Sometimes there is a need to cluster the fields of a dialog into logical groups, which improves the readability and understanding of complex data entry forms (dialogs).

To enable the designer to do so, there is a new keyword "group" followed by an id in the grammar of EntityDSL. The layouting strategy logic of OS.bee finds common ids and collects the attributes together no matter in which order they appear in the entity. The group id cannot have blanks or special characters. The id is automatically added to the i18n properties of EntityDSL in order to be translatable.

Grouped and non-grouped attributes can appear mixed on a dialog. An example for the definition is here:

entity Mproduct_class extends BaseID {

persistenceUnit "businessdata"

domainKey String product_subcategory group category

var String product_category group category

var String product_department group department

var String product_family

ref Mproduct[ * ]products opposite product_class asGrid

}

The dialog is defined like this:

dialog Product_class describedBy "Product Class" autobinding Mproduct_classDto toolbar Dialog numColumns 1

The resulting dialog at runtime looks like this:

As you can see the field "product family" is not grouped and the one-to-many relationship to products is rendered on a separated tabsheet because the keyword "asGrid" is used for the reference definition.
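How attributes with a common group id end up together, regardless of their order in the entity, can be sketched in Java as follows. This is not the OS.bee layouting strategy itself; `AttributeGrouping`, `Attribute` and `byGroup` are hypothetical names, and placing ungrouped attributes under an empty key is an assumption made for the sketch.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustrative grouping of dialog attributes by group id; names are hypothetical. */
final class AttributeGrouping {

    /** Key used for attributes that carry no group id. */
    static final String UNGROUPED = "";

    /** An attribute name with its optional group id (null means "not grouped"). */
    static final class Attribute {
        final String name;
        final String groupId;

        Attribute(String name, String groupId) {
            this.name = name;
            this.groupId = groupId;
        }
    }

    private AttributeGrouping() {
    }

    /** Collects attribute names per group id; groups appear in order of first use. */
    static Map<String, List<String>> byGroup(List<Attribute> attributes) {
        Map<String, List<String>> groups = new LinkedHashMap<>();
        for (Attribute attribute : attributes) {
            String key = attribute.groupId == null ? UNGROUPED : attribute.groupId;
            groups.computeIfAbsent(key, k -> new ArrayList<>()).add(attribute.name);
        }
        return groups;
    }
}
```

Fed with the attributes of Mproduct_class in any order, the sketch puts product_subcategory and product_category into the group "category", product_department into "department", and leaves product_family ungrouped.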

Here is a more complex example for products with a 2-column layout:

entity Mproduct extends BaseID {

persistenceUnit "businessdata"

domainDescription String product_name group category

var String brand_name group category

var String sku group domain

domainKey String fullName group domain

var double srp group sales

var boolean recyclable_package group customerinfo

var boolean low_fat group customerinfo

var MassGRAMMetricCustomDecimal net_weight group logistics

var int units_per_case group logistics

var int cases_per_pallet group logistics

var MassGRAMMetricCustomDecimal gross_weight group logistics

var LengthCMMetricCustomDecimal shelf_width group spacing

var LengthCMMetricCustomDecimal shelf_height group spacing

var LengthMetricCustomDecimal shelf_depth group spacing

var ProductClassification classification group category

var PLUNumber plu group sales

var String pluLabel group sales

ref Mproduct_class product_class opposite products group category

ref Minventory_fact[ * ]inventories opposite product

ref Msales_fact[ * ]sales opposite product

ref CashPosition[ * ]cashPositions opposite product asGrid

@PostLoad

def void makeFullName() {

fullName = sku+"\t"+product_name

}

index sku_index {

sku

}

index plu_index {

plu

}

}

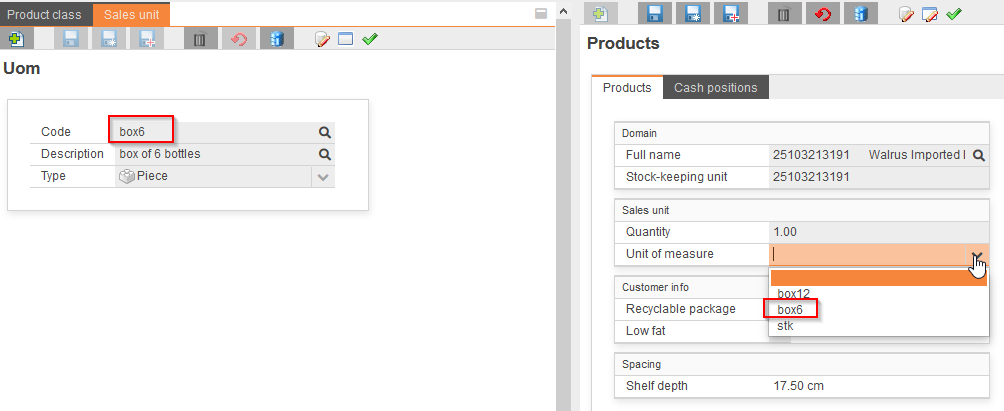

How units of measurements are handled

Often it comes to the situation that a certain value is bound to a unit of measurement. Lengths or masses are common examples. OS.bee supports units of measurement by using a framework called UOMo.

This dialog shows the usage of UOMo in the "Logistics" and "Spacing" group:

If you enter a big value into "Net Weight" for example, the logic will convert it to another unit in the same unit-family:

So there is some business-logic for uom implemented under the hood. When you look at the entity of this attribute, you'll find:

var MassGRAMMetricCustomDecimal net_weight group logistics

and in DatatypeDSL it looks like this:

datatype MassGRAMMetricCustomDecimal jvmType java.lang.Double asPrimitive

properties (

key="functionConverter" value="net.osbee.sample.foodmart.functionlibraries.UomoGRAMMetricConverter"

)

So what you can see is that the basic type is Double and a converter handles the uom stuff. Let's look at the FunctionLibraryDSL for this definition:

converter UomoGRAMMetricConverter {

model-datatype Double presentation-datatype BaseAmount

to-model {

var localUnitFormat = LocalUnitFormatImpl.getInstance( presentationLocale );

var baseUnit = localUnitFormat.format( MetricMassUnit.G )

var suffix =( presentationParams.get( 1 ) as String )

if( suffix === null ) {

suffix = baseUnit

}

if( localUnitFormat.format( MetricMassUnit.KG ).equals( suffix ) ) {

var amount = MetricMassUnit.amount( presentationValue, MetricMassUnit.KG )

return amount.to( MetricMassUnit.G ).value.doubleValue

}

else if( localUnitFormat.format( MetricMassUnit.MG ).equals( suffix ) ) {

var amount = MetricMassUnit.amount( presentationValue, MetricMassUnit.MG )

return amount.to( MetricMassUnit.G ).value.doubleValue

}

else {

return presentationValue

}

}

to-presentation {

var amount = MetricMassUnit.amount( modelValue, MetricMassUnit.G )

if( modelValue > 1000d ) {

amount = amount.to( MetricMassUnit.KG )

}

else if( modelValue < 1d ) {

amount = amount.to( MetricMassUnit.MG )

}

return amount as BaseAmount

}

}

As you can see, the logic of this function is separated into two parts:

- to-model

- to-presentation

As the function must provide logic to convert database values to the UI (presentation) and, after the user has changed a value, back to the database (model), it has two parts. The second part is called the inverse function. Each part tries to find the most suitable unit for the given value. The base unit is defined as the metric gram, and all other units stay in the same family. As a result, the value is always stored in base units.
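The two directions of the converter can be replayed in plain Java without the UOMo framework. The sketch below copies the thresholds from the converter above (more than 1000 g is shown in kg, less than 1 g in mg), but everything else is an assumption: `GramConverterSketch`, `Unit` and `Amount` are hypothetical stand-ins for the UOMo types, and locale-dependent unit suffixes are left out.

```java
/** Illustrative gram-based converter; not the UOMo API. */
final class GramConverterSketch {

    /** Units of the metric mass family used here, with their size in grams. */
    enum Unit {
        MG(0.001), G(1.0), KG(1000.0);

        final double grams;

        Unit(double grams) {
            this.grams = grams;
        }
    }

    /** A value together with the unit it is expressed in. */
    static final class Amount {
        final double value;
        final Unit unit;

        Amount(double value, Unit unit) {
            this.value = value;
            this.unit = unit;
        }
    }

    private GramConverterSketch() {
    }

    /** to-presentation: pick a readable unit for a value stored in grams. */
    static Amount toPresentation(double modelGrams) {
        if (modelGrams > 1000d) {
            return new Amount(modelGrams / Unit.KG.grams, Unit.KG);
        } else if (modelGrams < 1d) {
            return new Amount(modelGrams / Unit.MG.grams, Unit.MG);
        }
        return new Amount(modelGrams, Unit.G);
    }

    /** to-model (the inverse): convert the entered amount back to grams. */
    static double toModel(Amount presentation) {
        return presentation.value * presentation.unit.grams;
    }
}
```

Whatever unit the user sees or types, the value that reaches the database is always in grams, which is the point of defining a base unit.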

While you play around with units of measurement, you'll find out that you can easily build converters between families and also convert from imperial unit systems to metric (SI = Système international d'unités) and vice versa.

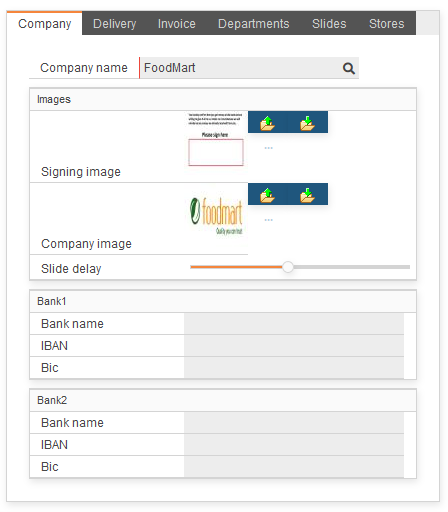

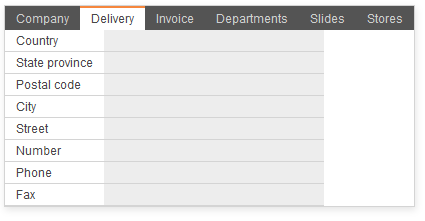

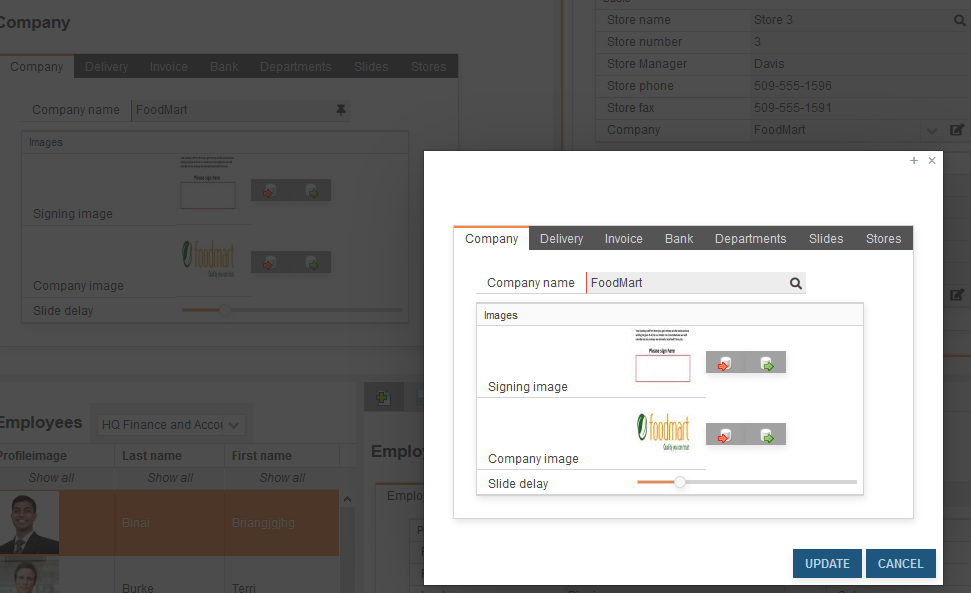

How sliders can improve the user experience with your dialogs

Sliders are well known to adjust analogue values in the world of audio.

If you want to use a similar technology to let your user adjust values in an analogue manner, you can use sliders. Just define a new datatype based on a numeric primitive type and supply it with the minimum and maximum values for the adjustable range.

datatype Slider_1000_2000 jvmType int

properties(

key="type" value="slider",

key="min" value="1000",

key="max" value="2000"

)

Use the new datatype in an entity:

var Slider_1000_2000 slideDelay

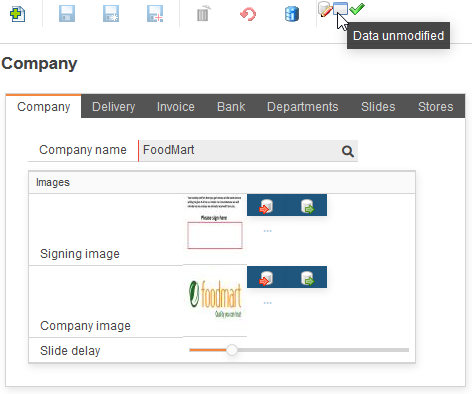

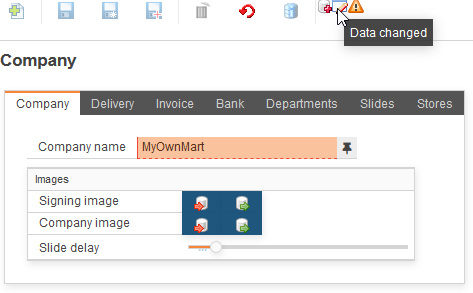

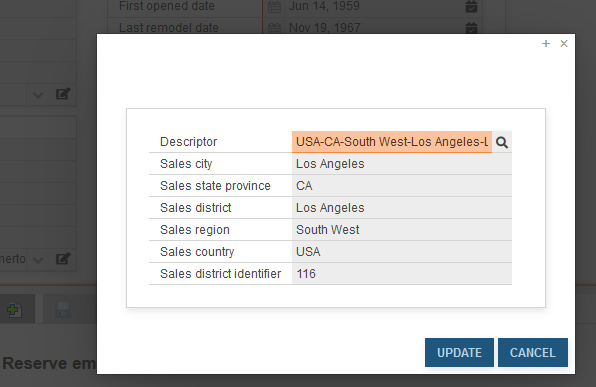

The resulting dialog looks like this:

This is more convenient than entering a number between 1000 and 2000.

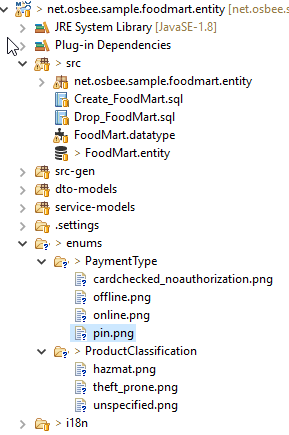

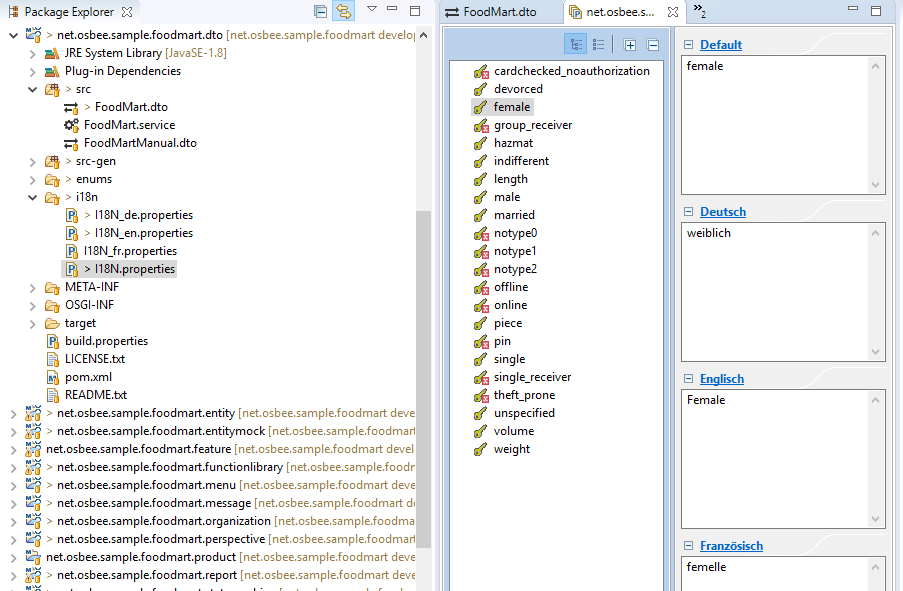

New ways to supply icons for enum literals

Icons are now generated automatically when the entity model is generated. In every location of entity models a folder "enums" is created, with one sub-folder per enumeration type. For every literal of the enum, a PNG file consisting of a single white pixel (1x1) is created. This makes it easy to supply a custom icon for every enum literal close to its definition: just copy a 16x16 pixel PNG file over the generated one. There is no need to suppress icons if none are needed, because the generated 1x1 pixel icons are invisible.

After generation the folders look like:

After you have overridden the icons, the combo box looks like this:

IMPORTANT: you must modify your build.properties as described here.

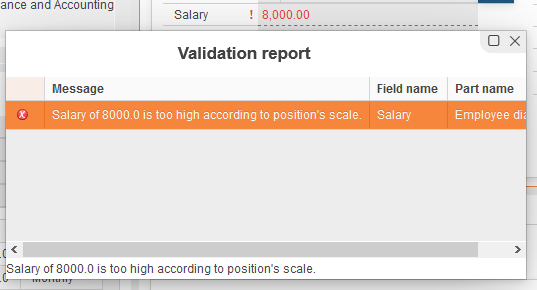

Validation

If you store data for later use, you will be confronted with the fact that users or imports sometimes enter data that is invalid for later processing. To avoid these problems you must validate data before it is stored. The need for generic validation of bean data led to the specification JSR 303 (Bean Validation), which was finalized in 2009. Based on this specification, Apache created a framework that implements Bean Validation.

OS.bee exploits this framework and grants access to some validation annotations by using a grammar extension in DatatypeDSL and EntityDSL. Therefore a kind of business logic is implemented by using validations. Naturally validation keywords are datatype specific and not all can be used everywhere.

The violation of a validation can be signalled to the user in 3 different levels of severity:

- INFO

- WARN

- ERROR

where only ERROR prevents data from saving to database.
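The rule that only ERROR blocks saving can be written down as a short Java sketch. The names `SaveGate`, `Severity` and `maySave` are hypothetical and only illustrate the rule; they are not taken from OS.bee or the validation framework.

```java
import java.util.List;

/** Illustrative save check based on the severities of all reported violations. */
final class SaveGate {

    /** The three severity levels a violation can be reported with. */
    enum Severity { INFO, WARN, ERROR }

    private SaveGate() {
    }

    /** Saving is allowed unless at least one violation has severity ERROR. */
    static boolean maySave(List<Severity> violations) {
        return !violations.contains(Severity.ERROR);
    }
}
```

INFO and WARN violations are still shown to the user, but they leave the decision whether to save with the user.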

The following validations per datatype can be used (either in DatatypeDSL or in EntityDSL):

- For all datatypes

- isNull invalid if value was set

- isNotNull invalid if value was never set

- Boolean

- isFalse invalid if value is true

- isTrue invalid if value is false

- Date/Time/Timestamp

- isPast invalid if the date does not lie in the past relative to today

- isFuture invalid if the date does not lie in the future relative to today

- Decimal (1.1, 1.12 ...)

- maxDecimal invalid if decimal exceeds the given value

- minDecimal invalid if decimal underruns the given value

- digits invalid if decimal has more digits or more fraction digits than the given 2 values

- regex invalid if the value does not match the given regular expression

- Numeric (1, 2, ...)

- maxNumber invalid if number exceeds the given value

- minNumber invalid if number underruns the given value

- minMaxSize invalid if number is not in the given range of 2 values

- regex invalid if the value does not match the given regular expression

- String

- regex invalid if the value does not match the given regular expression
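To make the digits rule more concrete, here is a minimal sketch in plain Java. It assumes that OS.bee follows the usual Bean Validation reading of this constraint (a maximum number of integer digits and a maximum number of fraction digits); the class and method names are hypothetical.

```java
import java.math.BigDecimal;

/** Hypothetical sketch of a digits(integer, fraction) check; not OS.bee API. */
final class DigitsCheck {

    /**
     * Returns true if the value has at most maxInteger digits before the
     * decimal point and at most maxFraction digits after it.
     */
    static boolean digits(BigDecimal value, int maxInteger, int maxFraction) {
        // precision = total number of digits, scale = digits after the point
        int integerLength = value.precision() - value.scale();
        int fractionLength = Math.max(value.scale(), 0);
        return integerLength <= maxInteger && fractionLength <= maxFraction;
    }

    public static void main(String[] args) {
        System.out.println(digits(new BigDecimal("123.45"), 3, 2)); // fits
        System.out.println(digits(new BigDecimal("1234.5"), 3, 2)); // too many integer digits
    }
}
```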

The messages prompted to the user come in a localized form out of the Apache framework.

An example for a regular expression is this:

var String[ regex( "M|F" [severity=error]) ]gender
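What the expression "M|F" accepts can be checked with a small plain-Java sketch. It assumes that the whole value must match the expression, as is usual for Bean Validation patterns, and that an unset value is left to isNotNull; the class name is hypothetical.

```java
import java.util.regex.Pattern;

/** Hypothetical sketch of the regex check for the gender field; not OS.bee API. */
final class GenderRegex {

    private static final Pattern GENDER = Pattern.compile("M|F");

    /** Assumption: null is treated as valid here, isNotNull would handle it. */
    static boolean isValid(String value) {
        return value == null || GENDER.matcher(value).matches();
    }

    public static void main(String[] args) {
        System.out.println(isValid("M"));  // matches
        System.out.println(isValid("MF")); // does not match as a whole
    }
}
```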

Here is an example of a date validation:

datatype BirthDate dateType date isNotNull isPast[severity=error]

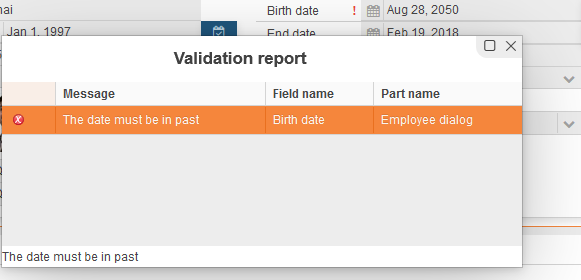

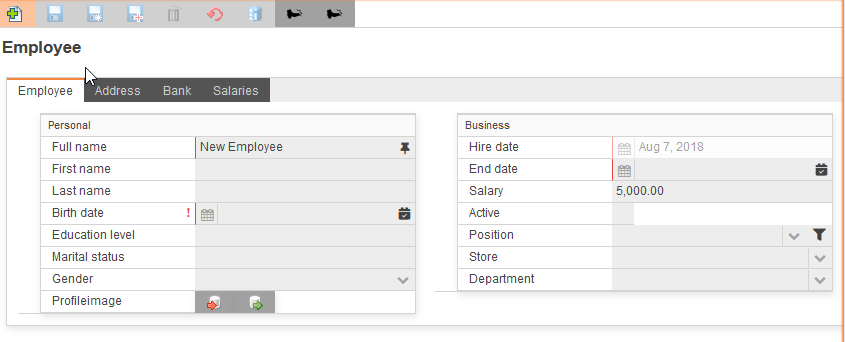

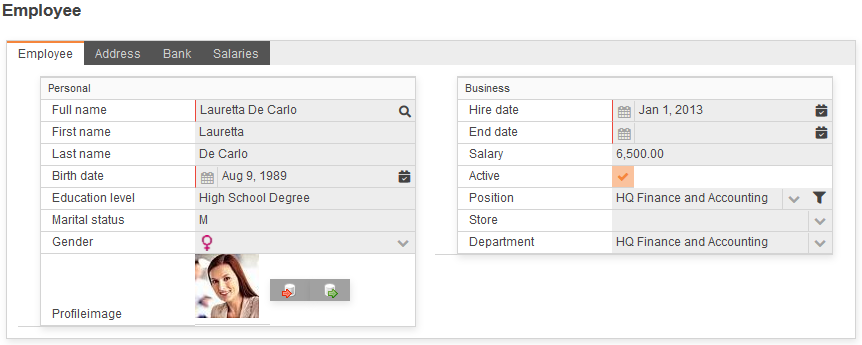

The violation of this rule looks like this:

If you point at the exclamation mark beside this field after closing the Validation report you will see:

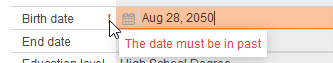

For EntityDSL there is an extra keyword to validate if an entry is already in the database or not. You can use it if you want unique entries in a certain field.

domainKey unique String full_name

If there is a violation of this rule, the dialog looks like this:

This also works for normal fields that are not domainKeys.

Extended Validation

Enforcing business rules can be a sophisticated task in traditional software projects. With OS.bee you can create a DTO validation with the FunctionLibraryDSL with less effort. Because DTOs build up dialogs in autobinding mode, you get a dialog validator for free.

These are the steps to create one:

- create a validation group in the FunctionLibraryDSL and name it like the DTO that you want to validate followed by the token Validations:

validation MemployeeDtoValidations { ... }

- MemployeeDto would be the DTO to validate.

- create inside the named validation group methods that should be processed every time a save button on a dialog is pressed or validate is called from somewhere else:

validate highSalary(Object clazz, Map<String, Object> properties) { ... }

- In clazz the DTO to validate is given. You could access data that is related to this DTO to validate e.g. the max salary allowed like this:

var dto = clazz as MemployeeDto if(dto.salary > dto.position.max_scale) { ... }

The properties map can be used to get some contextual information and services.

properties.get("viewcontext.service.provider.thirdparty")

gives access to the EclipseContext and therefore to many services registered there. Use the debugger to get more information.

var map = properties.get("viewcontext.services") as Map<String,Object>

var user = map.get("org.eclipse.osbp.ui.api.user.IUser") as IUser

gives access to the current user's data. To make the validation depend on a certain user role, you could use this code:

if(!user.roles.contains("Sales")) { ... }

Every validate method must return either null, if no validation rule is violated, or a Status. The Status is created as follows:

var status = Status.createStatus("", null, IStatus.Severity.ERROR, "salaryTooHigh", dto.salary)

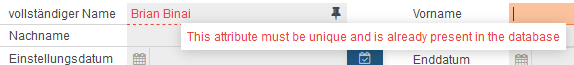

The first two parameters are optional and not explained here. The third parameter selects the severity (here: error). The fourth parameter is the translation key for the properties file, and the last parameter is an optional value that can be integrated into the translated message. Remember that all translation keys are decomposed into lowercase keys with underscores for compatibility reasons, so the key "salaryTooHigh" results in the key "salary_too_high". You could then create a translation like this:

{0} works as a placeholder where the last parameter is inserted. The appropriate message looks like this in the dialog:
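The key decomposition and the placeholder substitution can also be tried out in plain Java. This is a minimal sketch under assumptions: the class `TranslationKeys`, both method names and the message text are hypothetical, and OS.bee's own decomposition may treat edge cases differently. Only `java.text.MessageFormat` is standard Java.

```java
import java.text.MessageFormat;
import java.util.Locale;

/** Hypothetical sketch of key decomposition and {0} substitution; not OS.bee API. */
final class TranslationKeys {

    /** Turns a camelCase key such as "salaryTooHigh" into "salary_too_high". */
    static String toPropertyKey(String camelCase) {
        return camelCase
                .replaceAll("([a-z0-9])([A-Z])", "$1_$2") // underscore before each inner capital
                .toLowerCase(Locale.ROOT);
    }

    /** Inserts the value at the {0} placeholder of the translated pattern. */
    static String render(String pattern, Object value) {
        return MessageFormat.format(pattern, value);
    }

    public static void main(String[] args) {
        System.out.println(toPropertyKey("salaryTooHigh"));
        // The message text is an invented example, not taken from OS.bee.
        System.out.println(render("Salary {0} is too high", "5000"));
    }
}
```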

So, the complete code for the business rule "Salaries must lie within the range defined by the employee's position record; only users with the role "Sales" may exceed the upper limit, and nobody may fall below the lower limit" looks like this:

validation MemployeeDtoValidations {

validate highSalary(Object clazz, Map<String, Object> properties) {

var dto = clazz as MemployeeDto

if(dto.salary > dto.position.max_scale) {

var map = properties.get("viewcontext.services") as Map<String,Object>

var user = map.get("org.eclipse.osbp.ui.api.user.IUser") as IUser

if(!user.roles.contains("Sales")) {

var status = Status.createStatus("", null, IStatus.Severity.ERROR, "salaryTooHigh", dto.salary)

status.putProperty(IStatus.PROP_JAVAX_PROPERTY_PATH, "salary");

return status

}

}

return null

}

validate lowSalary(Object clazz, Map<String, Object> properties) {

var dto = clazz as MemployeeDto

if(dto.salary < dto.position.min_scale) {

var status = Status.createStatus("", null, IStatus.Severity.ERROR, "salaryTooLow", dto.salary)

status.putProperty(IStatus.PROP_JAVAX_PROPERTY_PATH, "salary");

return status

}

return null

}

}
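Stripped of the OS.bee specifics, the decision logic of the stated rule can be sketched in plain Java as follows. The class `SalaryRule` and its method are hypothetical; the sketch returns the translation key of the violated rule instead of a Status object and follows the rule as worded above.

```java
import java.util.Optional;
import java.util.Set;

/** Hypothetical plain-Java sketch of the salary rule; not OS.bee API. */
final class SalaryRule {

    /**
     * Returns the translation key of the violated rule, or empty if the
     * salary is acceptable for a user with the given roles.
     */
    static Optional<String> check(double salary, double minScale, double maxScale, Set<String> roles) {
        if (salary < minScale) {
            return Optional.of("salaryTooLow");   // applies to every user
        }
        if (salary > maxScale && !roles.contains("Sales")) {
            return Optional.of("salaryTooHigh");  // "Sales" may exceed the upper limit
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(check(9000, 1000, 8000, Set.of("Sales")));
        System.out.println(check(9000, 1000, 8000, Set.of("Clerk")));
    }
}
```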

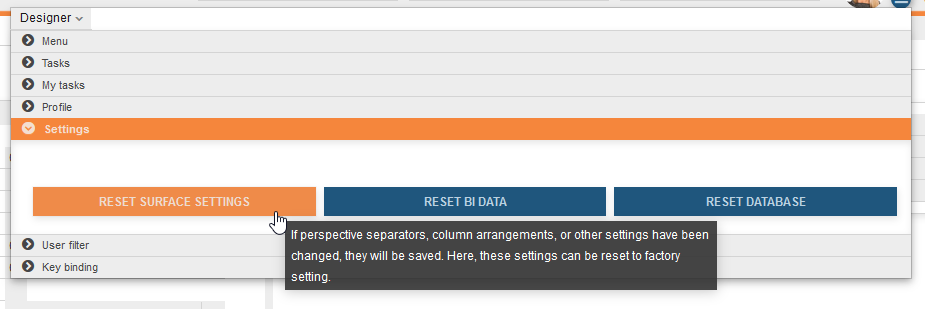

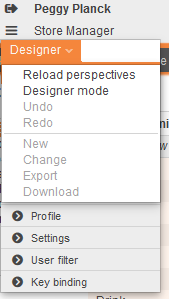

Reset cached data

In order to provide a responsive and modifiable user interface, some data is cached while some data is stored to the database. In case you need to reset this data, there is a new keyword in MenuDSL to provide a small dialog where this can be done.

category Settings systemSettings

If you have done so, the resulting menu will look like this:

The tooltip will provide additional information about what can be reset here. For the moment there are three options:

- reset surface settings

- modifications done by the current user during runtime are stored and restored with the next usage of the application. Modifications comprise

- splitter positions in perspectives

- column order in tables

- column width in tables

- column hiding in tables

- reset BI data

- the underlying framework for BI data is Mondrian that makes heavy use of caches for cube related data. Whenever data changes through the use of external tools, the cache will not be reset automatically. This can be done here.

- reset database

- the underlying framework JPA also makes use of caches. For the same reason as with Mondrian, its cache can be reset here.

Therefore, it is no longer necessary to restart the application server if data was changed with SQLDeveloper, TOAD or similar tools; just reset the caches here. And if you are unsatisfied with your private settings for the surface, reset them to factory settings here.

WARNING: If you press "reset database" or "reset BI", the reset affects the whole application with all currently connected sessions and users. BI analytics and database access will respond with a delay until all caches are rebuilt.

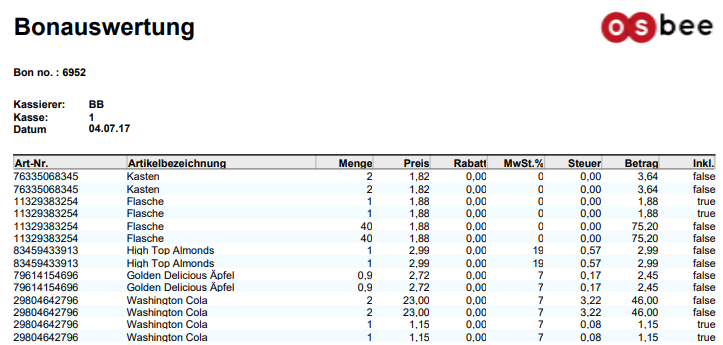

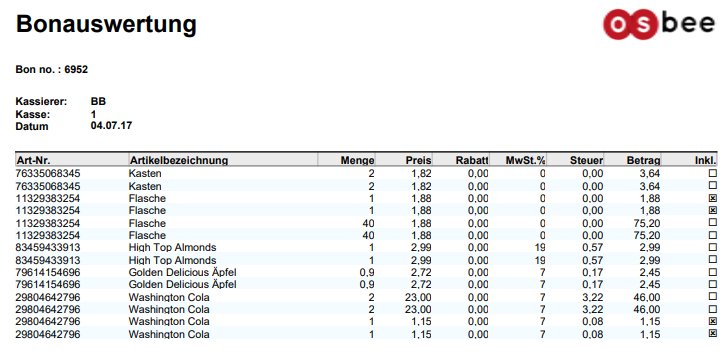

ReportDSL: How to get a checkbox for a Boolean attribute

A boolean attribute is normally rendered as the string "true" or "false", as you can see in the following report using this attribute:

entity CashPosition ... {

...

var boolean taxIncluded

...

}

But enhancing the attribute with the property checkbox as shown here:

entity CashPosition ... {

...

var boolean taxIncluded properties ( key = "checkbox" value = "" )

...

}

the report output for the same boolean attribute is like this:

How to collect business data and presenting meaningful statistics with OS.bee - INTRODUCTION

Before one can present and interpret information, there has to be a process of gathering and sorting data. Just as trees are the raw material from which paper is produced, so too, can data be viewed as the raw material from which information is obtained.

In fact, a good definition of data is "facts or figures from which conclusions can be drawn".

Data can take various forms, but are often numerical. As such, data can relate to an enormous variety of aspects, for example:

- the daily weight measurements of each individual in a region

- the number of movie rentals per month for each household

- the city's hourly temperature for a one-week period

Once data have been collected and processed, they are ready to be organized into information. Indeed, it is hard to imagine reasons for collecting data other than to provide information. This information leads to knowledge about issues, and helps individuals and groups make informed decisions.

Statistics represent a common method of presenting information. In general, statistics relate to numerical data, and can refer to the science of dealing with the numerical data itself. Above all, statistics aim to provide useful information by means of numbers.

Therefore, a good definition of statistics is "a type of information obtained through mathematical operations on numerical data".

| Information | Statistics |
|---|---|
| the number of persons in a group in each weight category (20 to 25 kg, 26 to 30 kg, etc.) | the average weight of colleagues in your company |
| the total number of households that did not rent a movie during the last month | the minimum number of rentals your household had to make to be in the top 5% of renters for the last month |
| the number of days during the week where the temperature went above 20°C | the minimum and maximum temperature observed each day of the week |

Business analysis is the term used to describe visualizing data in a multidimensional manner. Query and report data is typically presented in row after row of two-dimensional data. The first dimension is the headings for the data columns and the second dimension is the actual data listed below those column headings, called the measures. Business analysis allows the user to plot data in row and column coordinates to further understand the intersecting points. But more than two dimensions usually apply to business data. You could analyze data along coordinates such as time, geography, classification, person, position and many more.

OS.bee is designed for Online analytical processing (OLAP) using a multidimensional data model, allowing for complex analytical and ad hoc queries with a rapid execution time. Typical applications of OLAP include business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas.

Study this excellent guide for a deeper understanding of cubes, dimensions, hierarchies and measures: Beginner's guide to OLAP.

How to collect business data and presenting meaningful statistics with OS.bee – PART1

The storage and retrieval containers

In a nutshell:

- we store data using entities and relationships

- we retrieve information using cubes and dimensions.

Storage with entities

The backbone of statistics is a container for quantitative facts. In this tutorial we want to create statistical data upon cash-register sales. We call the container for these facts SalesFact. It inherits from BaseUUID, which provides a primary key and some database information, and saves data within the persistence unit businessdata:

entity SalesFact extends BaseUUID {

persistenceUnit "businessdata"

/* actual net revenue */

var double sales

/* net costs of the goods and costs for storage */

var double costs

/* quantity of goods sold */

var double units

}

If we left the container as is, we could aggregate some measures, but we would have no idea of when, where and what was sold. So we need additional information related to this event of sale. We call it a coordinate system for measures or just a dimension.

entity SalesFact extends BaseUUID {

persistenceUnit "businessdata"

/* actual net revenue */

var double sales

/* net costs of the goods and costs for storage */

var double costs

/* quantity of goods sold */

var double units

/* what product was sold */

ref Mproduct product opposite salesFact

/* when was it sold */

ref MtimeByDay thattime opposite salesFact

/* to whom it was sold */

ref Mcustomer customer opposite salesFact

/* was it sold during a promotional campaign */

ref Mpromotion promotion opposite salesFact

/* where was it sold */

ref Mstore store opposite salesFact

/* which slip positions were aggregated to this measure (one to many relationship) */

ref CashPosition[ * ]cashPositions opposite salesFact

/* which cash-register created the sale */

ref CashRegister register opposite salesFact

}

Please don't forget to supply the opposite sides of each reference (relation) with the backward references:

ref SalesFact[ * ]salesFact opposite product

...

ref SalesFact[ * ]salesFact opposite thattime

...

ref SalesFact[ * ]salesFact opposite customer

...

ref SalesFact[ * ]salesFact opposite promotion

...

ref SalesFact[ * ]salesFact opposite store

...

ref SalesFact[*] salesFact opposite register

...

ref SalesFact salesFact opposite cashPositions
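Why the references to dimensions matter can be illustrated with a small plain-Java sketch that sums the measure sales along one dimension (the store). This only illustrates the idea of aggregating a measure along a dimension; in OS.bee this work is done by cubes, not by hand-written code. The class `SalesByDimension`, its nested `Fact` class and the sample values are hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

/** Hypothetical sketch: aggregating a measure along one dimension. */
final class SalesByDimension {

    /** A fact reduced to one dimension (store) and one measure (sales). */
    static final class Fact {
        private final String store;
        private final double sales;

        Fact(String store, double sales) {
            this.store = store;
            this.sales = sales;
        }

        String store() { return store; }
        double sales() { return sales; }
    }

    /** Sums the sales measure per store, sorted by store name. */
    static Map<String, Double> salesByStore(List<Fact> facts) {
        return facts.stream().collect(
                Collectors.groupingBy(Fact::store, TreeMap::new,
                        Collectors.summingDouble(Fact::sales)));
    }

    public static void main(String[] args) {
        List<Fact> facts = List.of(
                new Fact("Store A", 10.0),
                new Fact("Store B", 7.5),
                new Fact("Store A", 5.0));
        System.out.println(salesByStore(facts));
    }
}
```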

Let's have a look at a very special container, the time. The date attribute alone is not enough. You must add some additional information, and therefore functionality, so that it becomes a usable dimension:

entity MtimeByDay extends BaseID {

persistenceUnit "businessdata"

var Date theDate

var String theDay

var String theMonth

var String theYear

var String theWeek

var int dayOfMonth

var int weekOfYear

var int monthOfYear

var String quarter

ref SalesFact[ * ]salesFact opposite thattime

@PrePersist

def void onPersist() {

var dt = new DateTime(theDate)

theDay = dt.dayOfWeek().asText

theWeek = dt.weekOfWeekyear().asText

theMonth = dt.monthOfYear().asText

theYear = dt.year().asText

weekOfYear = dt.weekOfWeekyear().get

dayOfMonth = dt.dayOfMonth().get

monthOfYear = dt.monthOfYear().get

quarter = 'Q' + (((monthOfYear - 1) / 3) + 1)

}

index byTheDate {

theDate

}

}

As you can see from the code, the given date theDate is used to calculate other values that are useful for retrieving aggregates of measures using a dimension like time with the level quarter or theYear. If we want to use a "Timeline" as dimension for statistics from OLAP, we also need to create an entry and a relation to the MtimeByDay entity.
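The derivation of the time levels from a single date can be tried out independently of OS.bee. The following minimal sketch uses the standard `java.time` API instead of the `DateTime` class used in the entity; the class `TimeDimension` and its method names are hypothetical. It computes the quarter as ((month - 1) / 3) + 1, so that months 1 to 3 map to Q1, and it assumes ISO week numbering.

```java
import java.time.LocalDate;
import java.time.format.TextStyle;
import java.time.temporal.IsoFields;
import java.util.Locale;

/** Hypothetical sketch of deriving time-dimension levels from a date. */
final class TimeDimension {

    /** Months 1-3 give Q1, 4-6 give Q2, 7-9 give Q3, 10-12 give Q4. */
    static String quarterOf(LocalDate date) {
        return "Q" + ((date.getMonthValue() - 1) / 3 + 1);
    }

    /** ISO week number within the week-based year. */
    static int weekOfYear(LocalDate date) {
        return date.get(IsoFields.WEEK_OF_WEEK_BASED_YEAR);
    }

    /** English name of the weekday, e.g. "Monday". */
    static String dayName(LocalDate date) {
        return date.getDayOfWeek().getDisplayName(TextStyle.FULL, Locale.ENGLISH);
    }

    public static void main(String[] args) {
        LocalDate date = LocalDate.of(2024, 4, 1);
        System.out.println(dayName(date) + " " + weekOfYear(date) + " " + quarterOf(date));
    }
}
```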

How are these calculations invoked?

Because of the @PrePersist annotation on the method declaration of onPersist, JPA calls this method every time before a new entry in MtimeByDay is inserted. Be careful inside these methods: if an exception is thrown due to sloppy programming (e.g. a null pointer exception), nothing in the method will be evaluated. Here are the other entities we need later: